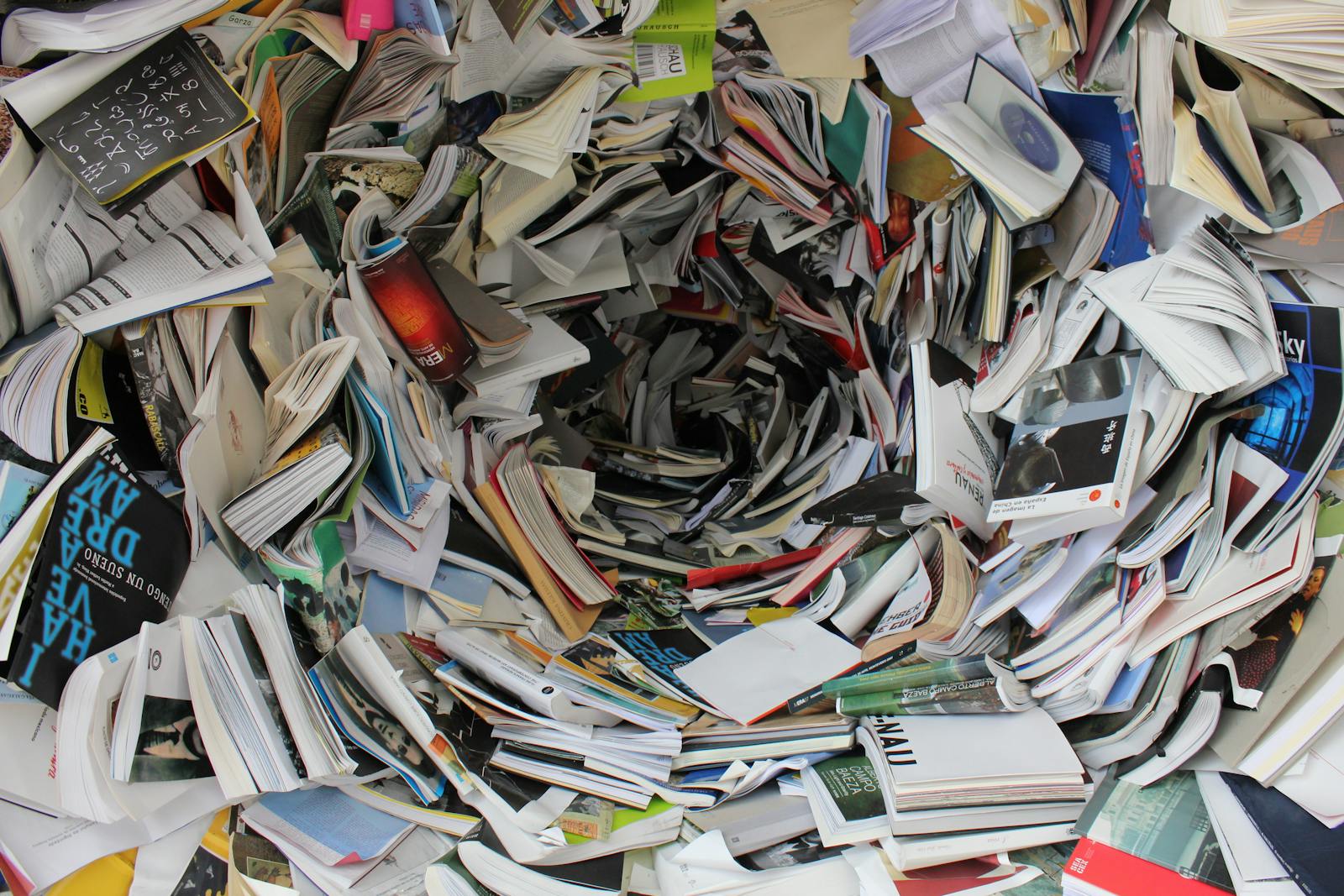

Artificial intelligence is advancing at a breathtaking pace — but behind the breakthroughs sits a growing problem researchers warn could undermine the entire field: a flood of low-quality, poorly reviewed, hastily generated, or outright AI-written scientific papers.

In academic circles, this is known as the “AI slop problem.”

And it’s getting worse.

Conferences are overwhelmed with submissions. Journals are struggling to distinguish real research from machine-generated noise. Peer reviewers are burnt out. Students are submitting AI-generated papers without disclosure. And the publish-or-perish culture across universities is turbocharging the chaos.

Academics say the field is at risk of becoming a mess of repetition, low-value findings, misleading claims, and artificial inflation of research output — making it harder for high-quality research to shine and for the broader community to identify what truly matters.

Here’s a deeper look at the crisis — and why the solution isn’t as simple as banning AI tools.

🧠 How AI Created Its Own Research Quality Crisis

AI systems like ChatGPT, Claude, and Gemini have made writing faster than ever.

But when applied to scientific publishing, this creates several major issues.

1. Too Many Papers, Not Enough Reviewers

Top AI conferences like NeurIPS and ICML saw explosive growth:

- thousands more submissions each year,

- entire sessions dedicated to near-duplicate papers,

- reviewers overwhelmed with low-quality drafts,

- record rejection rates without any clear gain in the quality of what gets accepted.

Reviewers simply cannot keep up, leading to rushed reviews or superficial acceptance.

2. AI-Generated Papers Are Hard to Spot

AI makes it easy to generate:

- synthetic abstracts,

- plausible but incorrect experiments,

- fabricated citations,

- rewritten versions of existing work.

These papers look polished — but lack rigor.

Worse, many reviewers now admit:

“We can’t reliably tell which papers were written by humans.”

3. The Rise of “Paper Mills”

Some online services now:

- sell AI-written research papers,

- fabricate data,

- generate diagrams,

- produce multiple versions of the same study.

These papers often end up in low-tier journals, inflating CVs and corrupting the scientific record.

4. Pressure on Students and Academics

Academic incentives reward:

- number of publications,

- speed of output,

- flashy results.

AI tools allow researchers to “multiply” publications, leading to:

- more noise,

- less originality,

- a culture of quantity over quality.

This harms early-career researchers trying to produce authentic, rigorous work.

🔍 What’s Actually Inside the Slop? Common Patterns Emerging

Researchers say low-quality AI papers often share certain features:

A. Overclaiming Results

AI-written papers often assert:

- breakthroughs without evidence,

- impact without experimentation,

- novelty where none exists.

B. Hallucinated Citations

Some citations include:

- nonexistent studies,

- wrong page numbers,

- fake authors,

- mismatched topics.
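Some of these errors can be caught mechanically by comparing each reference against a trusted bibliographic record. The Python sketch below assumes a small in-memory index of made-up records standing in for a real database. `Citation`, `build_index`, and `check_citation` are hypothetical names, and exact title matching is a simplifying assumption.

```python
import re
from dataclasses import dataclass


@dataclass
class Citation:
    title: str
    authors: list[str]
    year: int


def normalize(text: str) -> str:
    """Lowercase, turn punctuation into spaces, and collapse whitespace."""
    return " ".join(re.sub(r"[^a-z0-9]+", " ", text.lower()).split())


def build_index(records: list[Citation]) -> dict[str, Citation]:
    """Key trusted records by normalized title for exact lookup."""
    return {normalize(r.title): r for r in records}


def check_citation(cited: Citation, index: dict[str, Citation]) -> list[str]:
    """Return problems found for one citation; an empty list means no mismatch was detected."""
    record = index.get(normalize(cited.title))
    if record is None:
        return ["title not found in index"]
    problems = []
    if cited.year != record.year:
        problems.append(f"year mismatch: cited {cited.year}, indexed {record.year}")
    known = {normalize(a) for a in record.authors}
    unknown = [a for a in cited.authors if normalize(a) not in known]
    if unknown:
        problems.append(f"authors not on record: {unknown}")
    return problems


# Toy, made-up records (assumption); a real checker would query an actual bibliographic database.
index = build_index([Citation("A Study of Example Methods", ["Ada Author", "Ben Writer"], 2020)])

print(check_citation(Citation("A study of example methods.", ["Ada Author"], 2020), index))
print(check_citation(Citation("A Study of Example Methods", ["Carl Ghost"], 2019), index))
print(check_citation(Citation("Results That Never Existed", ["Ada Author"], 2021), index))
```

A check like this only confirms that a reference exists and that its metadata is consistent. It says nothing about whether the cited work actually supports the claim, and a production tool would likely need fuzzy title matching and a real data source.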

C. Repetitive Content

Multiple papers rephrase:

- existing methods,

- established findings,

- widely known techniques

but present them as new contributions.

D. Lack of Reproducibility

Many papers lack:

- open datasets,

- code,

- experimental details,

- statistical rigor.

Reproducibility becomes almost impossible.

🧪 How This Affects Real Scientific Progress

This problem isn’t just academic bureaucracy — it affects the future of AI.

1. It Slows Down Real Research

Scientists waste time:

- reviewing junk papers,

- sorting through noise,

- verifying questionable claims.

Time spent filtering slop is time not spent innovating.

2. It Misleads Policymakers and the Public

Poorly vetted papers can:

- exaggerate AI capabilities,

- falsely report dangers or breakthroughs,

- influence regulation based on inaccurate data.

This shapes public perception in harmful ways.

3. It Undermines Trust in AI Research

When academic literature becomes polluted:

- top scientists disengage,

- journals lose credibility,

- students mimic low standards.

A field built on shaky foundations cannot withstand scrutiny.

📉 The Systemic Causes Behind the Crisis

A. The Incentive Problem

Academia rewards:

- quantity over quality,

- novelty over reproducibility,

- publication over verification.

AI just accelerates these distortions.

B. Conference Culture

AI conferences exploded into massive events where:

- many papers are rushed,

- reviewers are unpaid volunteers,

- decisions are sometimes inconsistent.

The system was fragile even before generative AI arrived.

C. Lack of AI-Verification Tools

There is still no widely adopted:

- reliable AI-authorship detector,

- system to verify reproducibility,

- citation accuracy scanner,

- cross-journal integrity database.

At the scale modern publishing needs, that infrastructure does not yet exist.

D. Venture-Backed Influence

Some labs publish “marketing papers” to:

- attract investors,

- justify valuations,

- boost model credibility.

Academic publishing becomes a strategic tool, not a scientific one.

E. Globalization of AI Research

An enormous number of institutions now produce AI work:

- under-resourced universities,

- private research outfits,

- commercial AI teams,

- government-funded labs.

Quality varies widely.

🛠️ How Researchers Are Trying to Fix the Mess

1. Requiring Code and Data Releases

When others can rerun the experiments, sloppy papers are quickly exposed.

2. AI-Aided Review Tools

Ironically, AI may help filter AI-made slop:

- citation verifiers

- originality detectors

- data-quality checks

- method cross-checking
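An “originality detector” can start as a simple measure of textual overlap. The sketch below assumes one common technique, word shingles (overlapping k-word sequences) compared with Jaccard similarity. It is not any particular venue's tool; `flag_near_duplicates`, the toy abstracts, `k=3`, and the 0.5 threshold are all illustrative assumptions.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping k-word sequences ("shingles") in the text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Intersection size divided by union size (0.0 for two empty sets)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def flag_near_duplicates(docs: dict[str, str], threshold: float = 0.5, k: int = 3):
    """Compare every pair of documents; return pairs whose shingle overlap meets the threshold."""
    sh = {name: shingles(text, k) for name, text in docs.items()}
    names = sorted(sh)
    flagged = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            score = jaccard(sh[a], sh[b])
            if score >= threshold:
                flagged.append((a, b, round(score, 2)))
    return flagged


# Hypothetical toy abstracts: paper_b is a lightly reworded copy of paper_a.
docs = {
    "paper_a": "We propose a simple method for training small language models on noisy data.",
    "paper_b": "We propose a simple method for training small language models on noisy web data.",
    "paper_c": "A survey of reinforcement learning benchmarks for robotics.",
}

print(flag_near_duplicates(docs))  # [('paper_a', 'paper_b', 0.77)]
```

Exact word overlap is easy to defeat with paraphrasing, which language models do well, so a check like this mainly catches lightly reworded copies. Pairwise comparison also grows quadratically with the number of papers; a larger system would likely use an approximation such as MinHash.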

3. Stronger Reviewer Incentives

Paying reviewers or giving them formal professional credit could improve the quality of reviews.

4. More Tiered Publishing

Separating:

- exploratory papers

- rigorous studies

- industry reports

helps readers and reviewers hold each kind of work to the standard that fits it.

5. Penalties for AI Fraud

Some journals now ban:

- undisclosed AI writing,

- fabricated data,

- AI-written peer reviews.

More enforcement is likely to follow.

🌍 The Bigger Picture: AI Research Is Growing Faster Than Academia Can Handle

AI is the first scientific field where:

- AI helps create the research,

- AI helps publish it,

- AI helps evaluate it,

- AI helps detect flaws in it.

This creates a loop that traditional peer review is not built for.

The slop problem is not a sign of decline; it is a sign of hyper-growth.

But without guardrails, the field risks drowning in its own output.

❓ Frequently Asked Questions (FAQs)

Q1: What exactly is “AI slop”?

Low-quality, AI-generated, repetitive, or fraudulent research papers that add noise instead of value to the scientific community.

Q2: Why is this happening now?

Because AI tools make writing easier, academic incentives push for quantity, and conferences lack the capacity to review the surge.

Q3: Are AI detectors reliable?

No. AI-authorship detection is highly unreliable and often produces false positives.

Q4: Is using AI in research unethical?

Not inherently — but failing to disclose its use, fabricating findings, or letting AI generate false claims is unethical.

Q5: Are top journals affected?

Yes. Even prestigious conferences and journals report a rising number of low-quality submissions.

Q6: Could this harm the future of AI?

If left unchecked, it could slow down real progress, mislead the public, and erode scientific trust.

Q7: What can journals do to fix the problem?

Implement stricter review standards, require reproducibility, use AI verification tools, and penalize misconduct.

Q8: Is this problem unique to AI research?

No — biology, medicine, and climate science are beginning to face similar waves of AI-generated slop.

✅ Final Thoughts

The AI research slop crisis is real — and it highlights a deeper truth:

Scientific systems built for the 20th century are not ready for the pace of 21st-century AI.

The solution isn’t banning AI.

It’s building new academic structures capable of handling an era where:

- papers can be written in hours,

- experiments can be simulated instantly,

- global talent contributes simultaneously.

If we can modernize research culture, AI could usher in a golden age of discovery.

If not, we risk burying real breakthroughs under an avalanche of noise.

Source: The Guardian