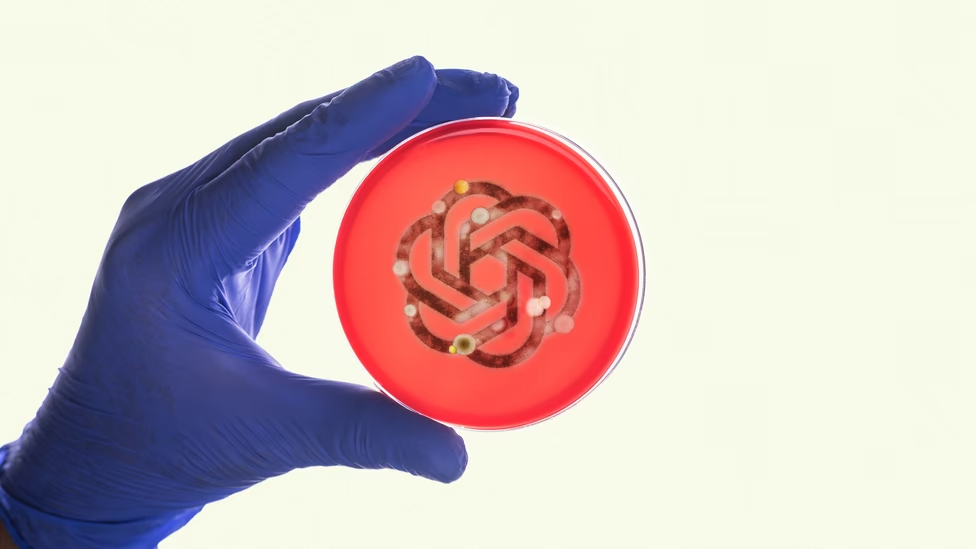

Scientific research has always depended on trust: trust that experiments were conducted honestly, that data reflects reality, and that peer review acts as a meaningful filter. Today, that trust is under strain—not from fraud alone, but from something subtler and far more scalable.

Artificial intelligence is flooding science with low-quality, misleading, and sometimes meaningless research—what many researchers now call “AI slop.”

This is not just an inconvenience. It is a systemic threat to how scientific knowledge is produced, evaluated, and shared.

What “AI Slop” Means in Scientific Publishing

AI slop refers to scientific papers, abstracts, reviews, and datasets that are:

- Automatically generated or heavily assisted by AI

- Superficially plausible but conceptually shallow

- Statistically weak, incoherent, or redundant

- Difficult to detect at scale

- Published faster than humans can meaningfully evaluate

These papers may not be intentionally fraudulent—but they pollute the scientific record.

Why Science Is Especially Vulnerable

Modern science already faces structural pressures:

- Publish-or-perish incentives

- Overloaded peer reviewers

- Explosion of journals and preprint servers

- Emphasis on quantity over quality

AI intensifies all of these pressures.

A single researcher—or paper mill—can now generate hundreds of manuscripts that look legitimate, overwhelming editorial systems built for a slower era.

How AI Slop Gets Through Peer Review

Peer review relies on:

- Limited volunteer time

- Trust in author intent

- Surface-level plausibility checks

AI-generated text exploits these weaknesses by:

- Mimicking scientific language convincingly

- Repeating common structures and citations

- Avoiding obvious errors while lacking insight

Reviewers often don’t have the time—or access to raw data—to detect subtle nonsense.

The Hidden Costs of AI Slop

Wasted Time and Resources

Researchers spend hours sorting signal from noise.

Citation Contamination

Low-quality papers get cited, spreading errors through the literature.

Erosion of Trust

When readers can’t trust journals, science loses legitimacy.

Harm to Real Research

Important findings get buried under volume.

Why Detection Alone Won’t Solve the Problem

Many institutions respond by trying to:

- Detect AI-generated text

- Ban AI use outright

- Add disclosure requirements

These measures help—but they don’t address the root cause.

The real problem is incentives.

If researchers are rewarded for speed and volume, AI will always be used to maximize both.

What the AI Slop Debate Often Misses

AI Is Not the Villain

The same tools can help summarize literature, check statistics, and improve clarity when used responsibly.

The Publishing System Is Already Broken

AI didn’t create the crisis—it exposed it.

Open Science Faces a Paradox

Open platforms accelerate discovery—but also accelerate noise.

Early-Career Researchers Are at Risk

They face pressure to compete in an inflated publication economy.

What a Healthier Scientific System Might Look Like

Addressing AI slop requires structural change:

Reward Quality Over Quantity

Fewer papers, evaluated more deeply.

Stronger Editorial Gatekeeping

Editorial checks should include statistical review and data validation.
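As one illustration of what an automated statistical check could look like, the sketch below recomputes a two-sided p-value from a reported z statistic and compares it with the p-value the paper reports. The function names and the tolerance are hypothetical choices made for this example, not an existing journal tool, and real statistical review covers far more than this one consistency test.

```python
import math


def two_sided_p_from_z(z: float) -> float:
    """Two-sided p-value for a standard normal test statistic."""
    # 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def p_value_is_consistent(z: float, reported_p: float, tolerance: float = 0.005) -> bool:
    """Return True if the reported p-value matches the recomputed one.

    The tolerance is an assumed allowance for rounding in the manuscript.
    """
    return abs(two_sided_p_from_z(z) - reported_p) <= tolerance


if __name__ == "__main__":
    # Hypothetical reported values: (z statistic, reported p-value).
    for z, p in [(1.96, 0.05), (1.2, 0.01)]:
        status = "consistent" if p_value_is_consistent(z, p) else "needs a closer look"
        print(f"z={z}, reported p={p}: {status}")
```

A mismatch does not prove misconduct. It only marks a result that a human statistical reviewer may want to examine.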

AI as a Tool for Review, Not Just Writing

Automated tools, including AI, can flag anomalies, duplication, and weak methodology.
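Duplication is the easiest of these to sketch in code. The example below is a minimal illustration, not a production screening system: it compares abstracts by the overlap of their three-word sequences (Jaccard similarity) and flags pairs above a threshold. The function names, the shingle size, and the 0.5 threshold are all assumptions chosen for the example.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set:
    """Return the set of k-word sequences found in the lowercased text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection over size of the union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def flag_near_duplicates(abstracts: dict, threshold: float = 0.5) -> list:
    """Return (id_a, id_b, score) for every pair at or above the threshold."""
    sets = {paper_id: shingles(text) for paper_id, text in abstracts.items()}
    flagged = []
    for (id_a, set_a), (id_b, set_b) in combinations(sets.items(), 2):
        score = jaccard(set_a, set_b)
        if score >= threshold:
            flagged.append((id_a, id_b, score))
    return flagged


if __name__ == "__main__":
    # Hypothetical submissions, invented for illustration only.
    submissions = {
        "p1": "We propose a novel deep learning framework for predicting protein folding outcomes",
        "p2": "We propose a novel deep learning framework for predicting protein binding outcomes",
        "p3": "Soil moisture was measured weekly across twelve field sites in 2019",
    }
    for id_a, id_b, score in flag_near_duplicates(submissions):
        print(f"{id_a} and {id_b} overlap: {score:.2f}")
```

Comparing every pair works for a small batch but scales quadratically, so a large submission queue would need an indexing scheme instead. A flag here is a prompt for an editor to look, not a verdict.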

Cultural Shift

Valuing replication, null results, and slow science.

The Role of Journals and Institutions

Publishers and universities must:

- Update authorship and disclosure standards

- Redesign peer review workflows

- Support reviewers with tools and compensation

- Reduce reliance on publication counts for career advancement

Without institutional reform, AI slop will continue to scale faster than oversight.

Why This Matters Beyond Academia

Scientific research underpins:

- Medicine and public health

- Climate policy

- Technology development

- Economic decision-making

If scientific literature becomes unreliable, the consequences extend far beyond journals.

Frequently Asked Questions

What exactly is “AI slop” in science?

Low-quality, AI-generated or AI-assisted research that adds noise rather than knowledge.

Is AI-generated research always bad?

No. AI can assist responsibly, but misuse at scale is the problem.

Can journals detect AI slop reliably?

Not consistently. Detection tools lag behind generation tools.

Is this the same as scientific fraud?

Not always. Many cases involve low standards rather than intentional deception.

Will banning AI fix the issue?

No. Incentive structures matter more than tool restrictions.

What’s the biggest risk if nothing changes?

Loss of trust in scientific knowledge itself.

The Bottom Line

Science is not drowning because AI exists.

It is drowning because a fragile publishing system met a tool that amplifies speed, volume, and superficial credibility.

AI slop forces an uncomfortable reckoning: how much of modern science was already optimized for output over insight.

The solution isn’t to reject AI—but to rebuild scientific culture around rigor, responsibility, and restraint.

If science can adapt, AI may yet strengthen discovery.

If it cannot, the noise will keep rising—until truth itself becomes harder to hear.

Sources: The Atlantic