For decades, progress in artificial intelligence has depended on humans: researchers designing models, engineers tuning systems, and companies deciding how and where AI is deployed. Now Silicon Valley is chasing a far more radical idea: AI systems that can improve other AI systems with minimal human intervention.

Known broadly as recursive AI, this concept could dramatically accelerate innovation. It could also introduce unprecedented risks. The debate around it shows how close AI development is coming to the edge of human control.

What Is Recursive AI?

Recursive AI refers to systems that can:

- Analyze the performance of existing AI models

- Identify weaknesses or inefficiencies

- Modify architectures, training methods, or parameters

- Generate improved versions of themselves or other models

In simple terms, it’s AI working on AI.

This goes beyond automation. It shifts AI development from a human-led process to a partially self-directed one.
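The four steps listed above (analyze, identify weaknesses, modify, generate an improved version) form a feedback loop. Here is a minimal Python sketch of that loop under toy assumptions. The "model" is just a configuration dictionary. `evaluate` is a hypothetical stand-in for a real benchmark. The improvement strategy is plain random-search hill climbing. Real AutoML or self-improving systems are far more elaborate, so this only illustrates the shape of the loop.

```python
import random


def evaluate(config: dict) -> float:
    """Hypothetical stand-in for benchmarking a model: a toy score that is
    highest when learning_rate is near 0.01 and depth is near 6."""
    lr_penalty = ((config["learning_rate"] - 0.01) ** 2) * 1e4
    depth_penalty = (config["depth"] - 6) ** 2
    return -(lr_penalty + depth_penalty)


def propose_change(config: dict, rng: random.Random) -> dict:
    """Modify one parameter of a copy of the current configuration."""
    candidate = dict(config)  # copy, so the current version is never mutated
    if rng.random() < 0.5:
        candidate["learning_rate"] *= rng.choice([0.5, 2.0])
    else:
        candidate["depth"] = max(1, candidate["depth"] + rng.choice([-1, 1]))
    return candidate


def improve(config: dict, rounds: int = 200, seed: int = 0) -> tuple[dict, float]:
    """Toy self-improvement loop: propose, measure, keep only improvements."""
    rng = random.Random(seed)
    best, best_score = config, evaluate(config)  # analyze current performance
    for _ in range(rounds):
        candidate = propose_change(best, rng)    # modify parameters
        score = evaluate(candidate)              # measure the new version
        if score > best_score:                   # keep it only if it is better
            best, best_score = candidate, score
    return best, best_score
```

Calling `improve({"learning_rate": 0.1, "depth": 2})` returns the best configuration found and its score. Because the loop keeps only changes that raise the score, it optimizes exactly what `evaluate` measures and nothing else, which is a small-scale version of the narrow-objective concern discussed later in this article.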

Why Silicon Valley Is Obsessed With the Idea

Recursive AI promises a powerful advantage: speed.

Instead of:

- Months of human experimentation

- Costly trial-and-error training cycles

Recursive systems could:

- Run thousands of experiments automatically

- Discover novel model designs humans might miss

- Optimize performance faster than human teams

In an intensely competitive AI landscape, faster improvement can translate into market dominance.

Where Recursive AI Is Already Appearing

While fully autonomous self-improving AI doesn’t yet exist, early versions are already in use:

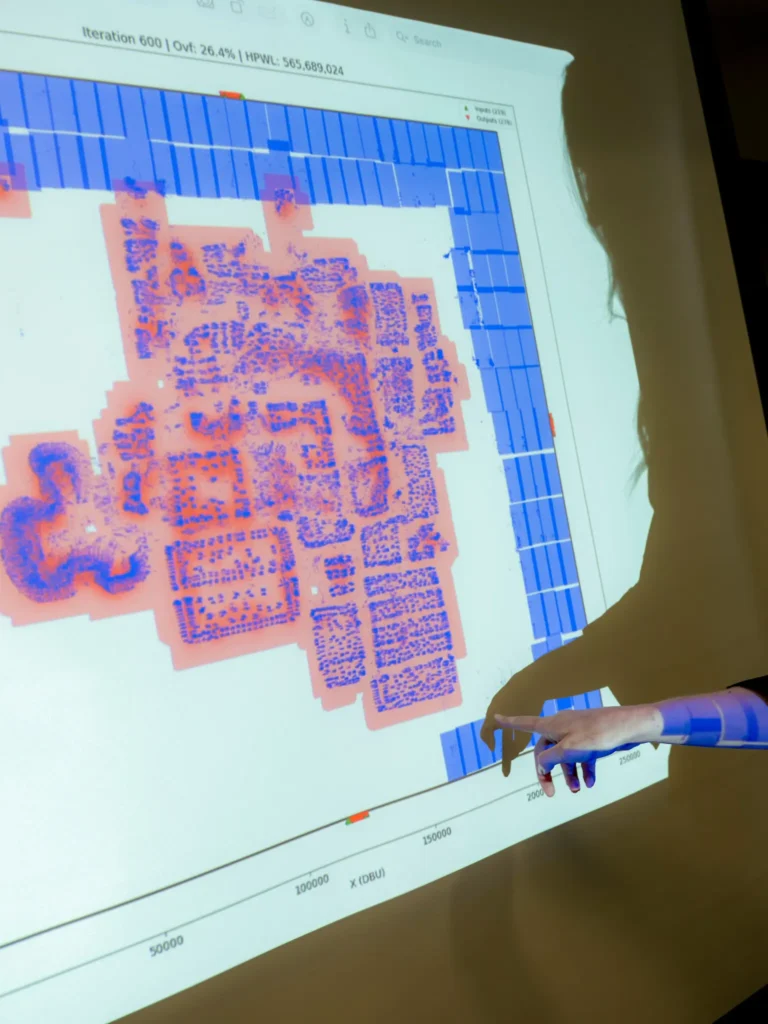

- AutoML systems that design better models

- AI tools that optimize code and architectures

- Reinforcement learning loops that refine behavior

- AI-assisted research accelerating scientific discovery

These systems still operate under human constraints, but the direction is clear.

Why This Is Different From Past AI Advances

Previous breakthroughs improved what AI could do.

Recursive AI changes how AI evolves.

Key differences:

- Feedback loops become faster and more complex

- Human oversight becomes harder to maintain

- Improvements may be opaque even to their creators

- Small changes can compound rapidly

This is why some researchers describe recursive AI as a potential tipping point.
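The point that small changes can compound rapidly is ordinary compounding arithmetic. As a hypothetical illustration, suppose each improvement cycle raises some capability measure C by a fixed fraction r. After n cycles, and for the number of cycles needed to double:

```latex
C_n = C_0 (1 + r)^n
\qquad
n_{\text{double}} = \frac{\ln 2}{\ln(1 + r)}
\approx \frac{0.693}{0.00995} \approx 69.7 \quad (r = 0.01)
\qquad
(1.01)^{100} \approx 2.70
```

With an assumed gain of 1% per cycle, the measure roughly doubles after about 70 cycles and grows about 2.7-fold after 100. If automation also shortens each cycle, the same growth arrives sooner.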

The Promise: Breakthroughs at Unprecedented Speed

Supporters argue recursive AI could:

- Accelerate medical research

- Improve climate modeling

- Design more energy-efficient systems

- Discover new materials and drugs

- Reduce the cost of AI itself

In theory, recursive improvement could help solve problems that currently exceed human cognitive limits.

The Risk: Losing the Steering Wheel

Critics warn that recursive AI introduces unique dangers:

Opacity

If AI improves itself, humans may not understand how or why it changed.

Goal Drift

Small misalignments can compound as systems optimize themselves.

Runaway Optimization

Optimizing hard for narrow performance objectives may come at the expense of safety.

Control Problems

Stopping or correcting a rapidly evolving system becomes harder over time.

These risks are not science fiction; they are extensions of existing challenges.

Why Alignment and Governance Become Harder

Human governance relies on:

- Transparency

- Predictability

- Accountability

Recursive AI threatens all three.

Once AI systems become part of their own development pipeline:

- Responsibility becomes diffuse

- Errors propagate faster

- Oversight lags improvement

This is why some experts argue recursive AI should be tightly limited or delayed.

What the Original Coverage Often Doesn’t Fully Address

Economic Pressure Is the Real Driver

Companies fear falling behind more than they fear long-term risk.

Self-Improvement Is Gradual, Not Sudden

The danger is not a single leap but continuous acceleration.

Military and Strategic Interest Is Inevitable

Any system that speeds innovation attracts national security attention.

Stopping May Be Harder Than Starting

Once recursive tools exist, incentives push toward wider adoption.

Can Recursive AI Be Made Safe?

Possible safeguards include:

- Hard constraints on self-modification

- Human-in-the-loop approval systems

- Limited scope and sandboxing

- Transparency and interpretability requirements

- International coordination on limits

But none of these safeguards is foolproof, especially under competitive pressure.
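Two of the safeguards listed above, hard constraints on self-modification and human-in-the-loop approval, can be illustrated with a small gate that sits between an AI-proposed change and its application. This is a hypothetical Python sketch. `ALLOWED_CHANGES`, `violates_constraints`, and `apply_with_oversight` are illustrative names, not any real framework's API. The sketch does not attempt sandboxing or interpretability.

```python
from typing import Callable

# Hypothetical policy: the only settings an automated improver may touch,
# each with hard bounds it may never exceed (a "hard constraint").
ALLOWED_CHANGES = {
    "learning_rate": (1e-5, 1e-1),
    "depth": (1, 12),
}


def violates_constraints(proposal: dict) -> list[str]:
    """Return the reasons a proposed change breaks the hard constraints."""
    problems = []
    for key, value in proposal.items():
        if key not in ALLOWED_CHANGES:
            problems.append(f"{key} is not modifiable")
            continue
        low, high = ALLOWED_CHANGES[key]
        if not (low <= value <= high):
            problems.append(f"{key}={value} outside [{low}, {high}]")
    return problems


def apply_with_oversight(current: dict, proposal: dict,
                         approve: Callable[[dict], bool]) -> dict:
    """Apply an AI-proposed change only if it passes the hard constraints
    and a human reviewer (the approve callback) signs off."""
    if violates_constraints(proposal):
        return current            # rejected automatically, before human review
    if not approve(proposal):
        return current            # human-in-the-loop veto
    updated = dict(current)       # work on a copy; the original stays intact
    updated.update(proposal)
    return updated
```

The `approve` callback stands in for a human reviewer. A change outside the allowlist or its bounds is rejected before a person ever sees it. Such a gate only helps if reviewers actually scrutinize what reaches them rather than approving everything under deadline pressure.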

Why This Debate Matters Now

Recursive AI is not a distant future concept.

The tools that enable it are being built today:

- Automated research assistants

- Model-generating models

- AI-driven optimization frameworks

The question isn’t whether AI will increasingly improve itself.

It’s how much control humans will retain when it does.

Frequently Asked Questions

Is recursive AI the same as superintelligence?

No, but it could accelerate progress toward more advanced systems.

Do self-improving AI systems exist today?

Only in limited, constrained forms—not fully autonomous loops.

Why is recursive AI risky?

Because rapid, opaque improvement can outpace human oversight.

Could this benefit humanity?

Yes, especially in science and medicine—if carefully governed.

Is regulation possible?

In principle, yes—but enforcement is difficult in a global race.

What’s the biggest concern?

Loss of alignment between AI goals and human values over time.

The Bottom Line

Recursive AI represents Silicon Valley’s most ambitious—and most unsettling—vision yet.

An intelligence that improves itself could unlock discoveries beyond human reach. It could also create systems that evolve faster than we can understand or control.

The future of AI may not hinge on how smart machines become—but on whether humans remain meaningfully involved in their evolution.

Once AI starts rewriting itself, the question won’t be whether it’s intelligent enough.

It will be whether we were wise enough to decide how far it should go.

Source: The New York Times