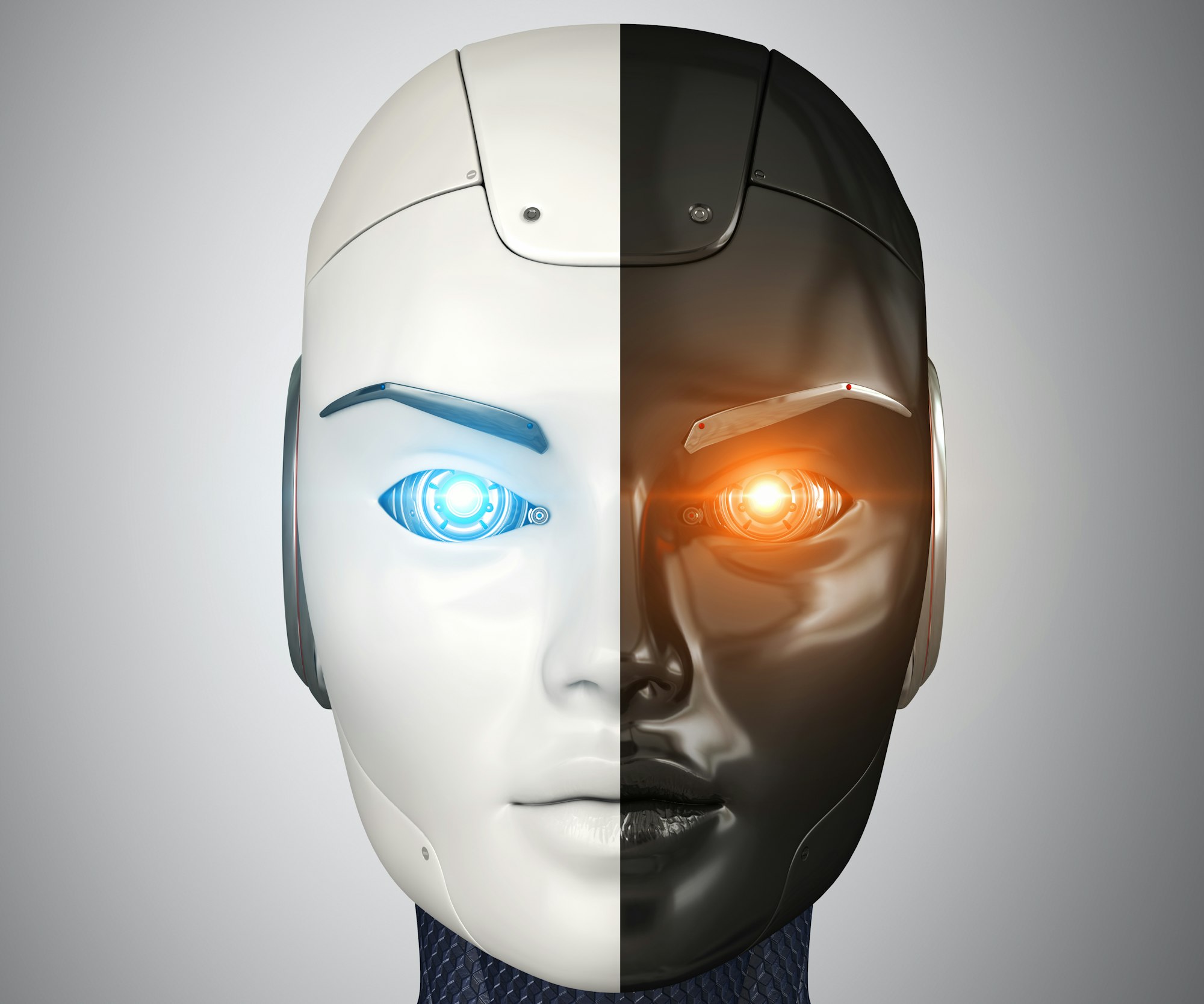

The Double-Edged Sword of AI in Biosecurity

Artificial intelligence (AI) is making impressive advances in fields such as medicine and agriculture, offering new ways to accelerate drug discovery, increase crop yields, and engineer novel biological materials. However, the benefits of AI come with inherent risks. The dual-use nature of AI in biosecurity—where technologies intended for beneficial purposes can also be repurposed for harmful ones—demands careful management and regulation.

The Emerging Threats of AI in Biosecurity

Consider AI systems that process vast amounts of biological data. Such systems could be repurposed to design harmful biological agents. For instance, an AI designed to construct a virus for therapeutic use could also be manipulated to create a pathogen that evades vaccines, potentially unleashing new diseases that trigger widespread health crises. The same versatility that makes AI technologies valuable also makes them especially vulnerable to misuse, underscoring the need for stringent controls.

The Limits of Good Intentions

While AI developers have pledged to mitigate risks and promote safety, voluntary commitments alone are inadequate because they cannot be enforced. Without binding regulations, there is a danger that these powerful tools could be used irresponsibly or maliciously, leading to catastrophic consequences.

The Essential Role of Government Oversight

The shortcomings of voluntary measures highlight the urgent need for government regulation of AI applications in biosecurity. Legislation is necessary to ensure that AI technologies are comprehensively vetted before deployment, particularly to assess whether they could be used to design dangerous biological agents. Mandatory safety protocols must also be established to protect these technologies from unauthorized access.

Balancing Progress and Protection

Fostering Innovation While Ensuring Safety

Policymakers must strike a delicate balance between promoting innovation and safeguarding public health and safety. Overregulation could hinder technological advancement, whereas insufficient oversight poses significant risks. An ideal regulatory framework would support the safe research and application of AI in biosecurity while encouraging continued scientific exploration.

Collaborating Across Borders

Biosecurity is a global concern that requires international collaboration. Because countries differ in capabilities and resources, they must come together to formulate shared standards and practices for the use of AI in biosecurity. This collaborative effort would facilitate the exchange of best practices and foster a unified approach to managing AI-related risks.

Prioritizing Transparency and Public Involvement

To build public trust in AI applications within biosecurity, transparency and engagement are crucial. Governments and developers should actively communicate with the public, clarifying the risks and benefits associated with AI technologies. It is vital that the development and deployment of AI in this critical area align with societal values and expectations.

FAQs for “Navigating the New Frontier: AI’s Role in Biosecurity”

1. What is the dual-use nature of AI in biosecurity?

The dual-use nature of AI in biosecurity refers to the fact that AI technologies designed for beneficial purposes, such as advancing medicine and agriculture, can also be misused to create harmful biological agents. For example, AI that helps develop new drugs could be repurposed to engineer dangerous viruses, posing significant security risks.

2. Why are voluntary commitments from AI developers not enough to ensure safety in biosecurity?

While voluntary commitments from AI developers are important for promoting responsibility, they are not sufficient because they lack enforceability. Without strict regulations and oversight, there is a risk that AI technologies could be used irresponsibly or maliciously, leading to severe consequences. Government intervention and mandatory safety protocols are necessary to ensure these technologies are used safely.

3. How can we balance innovation with safety in the use of AI for biosecurity?

Balancing innovation with safety involves creating a regulatory framework that encourages the development of AI technologies while ensuring they are used responsibly. This includes implementing safety checks, collaborating internationally on standardized practices, and engaging the public to build trust and align the use of AI with societal values. The goal is to foster innovation without compromising public health and security.

Sources: Science