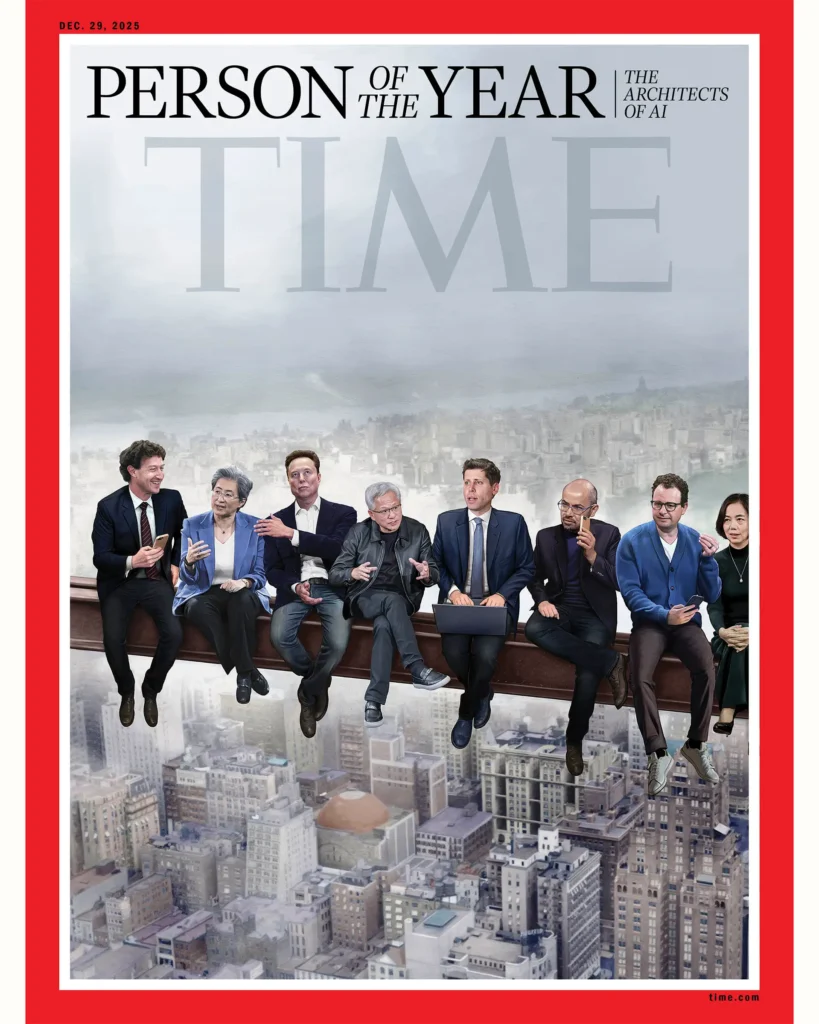

As it has in a handful of earlier years, TIME didn’t crown a single individual as Person of the Year. Instead, it honored a group, the AI Architects: the engineers, researchers, executives, and visionaries building the systems that now influence how billions of people work, learn, create, and communicate.

This choice wasn’t symbolic. It was inevitable.

Artificial intelligence is no longer a future technology. It is infrastructure. And the people designing that infrastructure now hold a level of influence once reserved for heads of state, industrial magnates, and revolutionary thinkers.

But who exactly are these AI Architects?

And what does their rise mean for the rest of us?

Who Are the “AI Architects”?

They are not just coders.

They include:

- founders of frontier AI labs

- chief scientists and research leads

- systems engineers building global-scale compute

- product leaders shaping how AI reaches the public

- policy influencers advising governments

- safety researchers setting boundaries

- corporate executives steering trillion-dollar bets

Some are household names.

Most are not.

Yet together, they decide:

- what AI can and cannot do

- whose data is used

- how fast systems are released

- where guardrails are placed

- who gets access — and who doesn’t

This collective power is unprecedented.

Why TIME Chose a Group, Not a Person

AI is not the product of one genius.

It’s the result of large teams, massive capital, and global coordination.

Unlike past innovations:

- no single inventor controls AI

- no single nation owns it

- no single company fully understands its limits

Honoring “the AI Architects” reflects a new reality: systemic power now matters more than individual brilliance.

What the Original Coverage Didn’t Fully Explore

TIME’s article focused on impact.

But the deeper story is about concentration, responsibility, and risk.

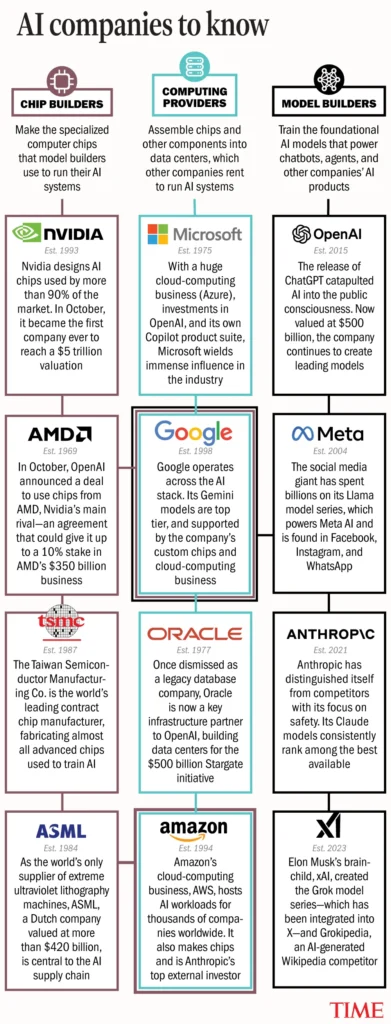

1. AI Power Is Highly Concentrated

Despite global adoption, real control sits with a small number of organizations that:

- own the most advanced models

- control access to compute

- set deployment timelines

- decide safety thresholds

By rough estimate, a few hundred people influence systems used by billions.

That asymmetry is historically rare.

2. The Architects Are Also Gatekeepers

AI Architects don’t just build tools. They decide:

- what speech is allowed

- what information is surfaced

- what content is filtered

- how bias is handled

- when systems refuse

These decisions shape culture, politics, and economics — often without democratic oversight.

3. Economic Power Is Shifting Fast

AI Architects are accelerating:

- automation of white-collar work

- restructuring of creative industries

- productivity gaps between firms

- wealth concentration around AI capital

Entire professions are being reshaped by design choices made in labs and boardrooms.

4. Safety and Speed Are in Constant Conflict

The same people pushing rapid innovation are also tasked with preventing harm.

This creates tension between:

- releasing powerful tools

- managing misuse risks

- responding to competition

- satisfying investors

- protecting the public

Mistakes scale instantly.

5. Governments Are Playing Catch-Up

While AI Architects move in months, governments move in years.

Regulation lags behind:

- autonomous decision-making

- AI-generated misinformation

- cyber risks

- labor displacement

- model accountability

This leaves unelected technologists filling a power vacuum.

The Moral Burden of Building Intelligence

Past engineers built machines that moved faster, lifted more, or traveled farther.

AI Architects are building systems that:

- reason

- persuade

- predict

- decide

- create

That makes their responsibility fundamentally different.

The choices they make today will echo for decades.

Why AI Architects Inspire Both Hope and Fear

Hope

- medical breakthroughs

- scientific discovery

- productivity gains

- accessibility tools

- education at scale

Fear

- mass surveillance

- job displacement

- misinformation floods

- concentration of power

- loss of human agency

Both outcomes depend on how AI is designed and governed.

This Is Not a Tech Story — It’s a Civilization Story

TIME’s recognition marks a turning point:

AI is no longer “just technology.”

It is a force shaping civilization itself.

The architects are not villains or heroes by default — but they are now stewards of something larger than any company or product.

What Comes Next

The next decade will determine:

- whether AI is broadly empowering or deeply unequal

- whether safety becomes standard or optional

- whether governance catches up to capability

- whether AI serves humanity — or a narrow elite

The AI Architects have started the story.

The rest of the world must now engage with it.

Frequently Asked Questions

Q1. Why did TIME choose “AI Architects” as Person of the Year?

Because AI’s impact is systemic, and no single individual represents its influence.

Q2. Who qualifies as an AI Architect?

Leaders, researchers, and engineers shaping frontier AI systems and their deployment.

Q3. Is this recognition praise or a warning?

Both. It acknowledges innovation while highlighting responsibility and risk.

Q4. Are AI Architects accountable to the public?

Not directly — which is why governance and transparency are urgent issues.

Q5. Will AI replace human decision-making?

It already influences decisions, but humans still hold final authority — for now.

Q6. Why is AI power so concentrated?

Because compute, data, and talent are expensive and scarce.

Q7. What role do governments play?

Governments are trying to regulate, but innovation is moving faster than policy.

Q8. Should the public be concerned?

Concern is healthy. Engagement and oversight are essential.

Q9. Can AI be designed responsibly?

Yes — but it requires incentives, regulation, and ethical leadership.

Q10. What’s the biggest risk going forward?

Unaccountable power over systems that shape truth, labor, and opportunity.

Sources: TIME