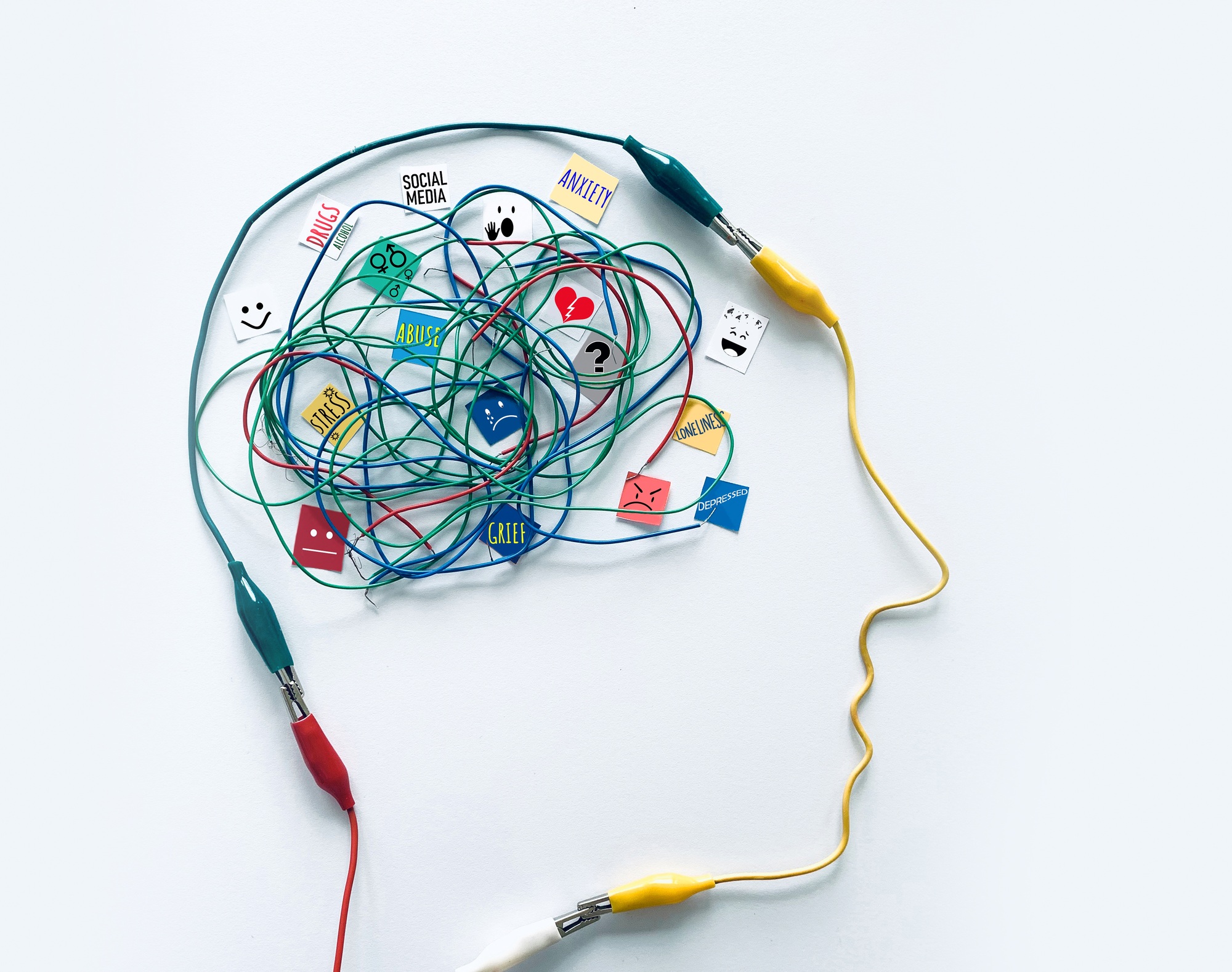

In recent years, advances in artificial intelligence (AI) have transformed many sectors, including mental health care. AI therapy chatbots, once a futuristic concept, are now emerging as accessible tools for people seeking mental health support. Traditional therapy remains invaluable, but these digital platforms are carving out a distinct space in the mental health landscape by offering immediate, low-cost, and discreet access to support. This article traces the evolution of AI therapy chatbots, explains how they work, weighs their benefits and limitations, and examines the ethical, regulatory, and future implications of the technology.

A Brief Background on AI in Mental Health

The mental health field has long grappled with problems of accessibility, affordability, and the social stigma surrounding therapy. Enter AI, a technology that promises to democratize access to mental health resources. Early chatbots relied on simple, rule-based scripts, but today's AI-powered systems use machine learning and natural language processing (NLP) to hold conversations that can resemble human empathy and understanding.

As these systems evolve, they are increasingly integrated into mobile applications and online platforms. They offer immediate support during moments of distress, serve as an initial point of contact, and can even complement traditional therapeutic methods by monitoring user moods and offering insights over time.

How AI Therapy Chatbots Work

At their core, AI therapy chatbots combine sophisticated algorithms with vast amounts of language data to generate empathetic, context-aware responses. Here’s a closer look at their inner workings:

- Natural Language Processing (NLP):

Chatbots analyze user input to infer the sentiment and context of a conversation. More advanced NLP models can pick up subtler emotional cues, helping responses stay both contextually relevant and supportive.

- Machine Learning:

Many systems are refined using data from past interactions, so their responses can improve over time. This iterative process can increase accuracy and make the support offered more timely and more personalized.

- Data Integration:

Some platforms integrate data from wearable devices or mood-tracking apps. This integration allows chatbots to offer tailored advice by monitoring physiological and emotional indicators, such as sleep patterns or stress levels.

- Pre-Programmed Therapeutic Frameworks:

Many chatbots are designed with therapeutic techniques in mind, drawing from cognitive-behavioral therapy (CBT), mindfulness practices, and other evidence-based approaches. While they do not replace professional diagnoses, they can help users navigate everyday stressors and mild mental health challenges.
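To make the steps above more concrete, here is a minimal, rule-based sketch of the "interpret the input, then respond within a therapeutic framework" loop. It is not how any particular product works: the word lists, the scoring rule, and the function names `score_sentiment` and `choose_reply` are hypothetical stand-ins for the trained NLP models real systems use, and the canned prompts only loosely echo CBT-style questioning.

```python
import re

# Hypothetical, hand-picked word lists standing in for a trained sentiment model.
NEGATIVE_WORDS = {"anxious", "sad", "stressed", "hopeless", "tired", "worried"}
POSITIVE_WORDS = {"calm", "happy", "relieved", "hopeful", "rested", "grateful"}


def score_sentiment(message: str) -> int:
    """Return (#positive words - #negative words) as a crude sentiment score."""
    words = re.findall(r"[a-z']+", message.lower())
    positive = sum(word in POSITIVE_WORDS for word in words)
    negative = sum(word in NEGATIVE_WORDS for word in words)
    return positive - negative


def choose_reply(message: str) -> str:
    """Pick a canned, CBT-flavoured prompt based on the sentiment score."""
    score = score_sentiment(message)
    if score < 0:
        # Negative mood: invite the user to examine the thought behind the feeling.
        return "That sounds hard. What thought went through your mind just before you felt this way?"
    if score > 0:
        # Positive mood: reinforce whatever helped.
        return "I'm glad to hear that. What do you think helped today?"
    # Neutral or unclear: ask an open question instead of guessing.
    return "Thanks for sharing. Can you tell me a bit more about how you're feeling?"


if __name__ == "__main__":
    print(choose_reply("I feel anxious and stressed about work"))
```

In a deployed system, `score_sentiment` would typically be replaced by a trained language model, and replies would draw on far more context than a single message. The sketch is only meant to show the two-stage structure.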

Benefits of AI Therapy Chatbots

The adoption of AI in mental health care offers a variety of compelling benefits:

- Increased Accessibility:

For people in remote areas, or those reluctant to seek help because of stigma, AI chatbots offer a private, always-available alternative. They reduce common barriers such as cost, scheduling conflicts, and geographic distance.

- Cost-Effective Support:

Traditional therapy can be expensive, and insurance coverage is often limited. AI-driven tools can provide continuous support at a fraction of the cost, making mental health care more affordable for a broader population.

- Immediate Intervention:

In moments of distress, waiting for an appointment with a human therapist may not be feasible. Chatbots can offer immediate coping strategies and a listening ear, potentially helping to defuse a situation before it escalates.

- Data-Driven Insights:

The digital nature of these interactions allows for the collection of anonymized data over time, which can help identify trends and common stressors. These insights can be invaluable for tailoring future mental health initiatives and understanding population-wide mental health trends.
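The "anonymized data" idea can be sketched in two steps: replace each user identifier with a keyed hash before storage, then publish only aggregate counts. This is a minimal illustration under stated assumptions, not a compliance recipe: the secret key, the `(user_id, stressor)` record layout, and the helper names `pseudonymize` and `stressor_trends` are hypothetical. Note that keyed hashing is pseudonymization rather than full anonymization, because whoever holds the key can still link records.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical secret; in practice it would live in a key-management system, not in code.
SECRET_KEY = b"replace-with-a-real-secret"


def pseudonymize(user_id: str) -> str:
    """Replace a user ID with a keyed SHA-256 hash so raw IDs are never stored."""
    return hmac.new(SECRET_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def stressor_trends(records: list[tuple[str, str]], min_users: int = 2) -> dict[str, int]:
    """Count distinct users per stressor and drop rare categories.

    Suppressing groups smaller than `min_users` makes it harder to single
    anyone out from the published totals.
    """
    users_per_stressor: dict[str, set[str]] = {}
    for user_id, stressor in records:
        users_per_stressor.setdefault(stressor, set()).add(pseudonymize(user_id))
    counts = Counter({stressor: len(users) for stressor, users in users_per_stressor.items()})
    # most_common() orders stressors from most to least reported.
    return {stressor: n for stressor, n in counts.most_common() if n >= min_users}


if __name__ == "__main__":
    sample = [
        ("alice", "work"), ("bob", "work"), ("carol", "work"),
        ("alice", "sleep"), ("bob", "sleep"), ("dave", "money"),
    ]
    print(stressor_trends(sample))
```

The threshold of two users is arbitrary; a real deployment would choose suppression rules together with privacy specialists.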

Limitations and Challenges

Despite their potential, AI therapy chatbots are not without significant challenges:

- Lack of Deep Empathy:

While AI can simulate empathy to a certain extent, it lacks the genuine human connection that many users find crucial for healing. The subtle emotional nuances that a human therapist picks up on may be missed by an algorithm.

- Risk of Misinterpretation:

The complexity of human emotion can sometimes lead to misunderstandings. AI systems might misinterpret a user's tone or context, leading to responses that are not entirely appropriate for the situation.

- Handling Crisis Situations:

AI chatbots are not equipped to deal with severe mental health crises or emergency situations. There is ongoing debate about the protocols that should be in place when a user expresses suicidal ideation or severe distress.

- Algorithmic Bias and Limitations:

Like all AI systems, therapy chatbots are only as good as the data they are trained on. Inadequate or biased training data can lead to responses that are less effective, or even inadvertently harmful, for certain groups of people.

- Privacy and Data Security:

Mental health conversations involve highly sensitive personal data. Storing and handling that data securely, in compliance with data protection regulations, remains a critical challenge.
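The crisis-handling concern above is often framed as a safety check that runs before any normal chatbot reply. The sketch below shows only that control flow, under loud assumptions: the phrase list, the escalation message, and the names `respond`, `Reply`, and `generate_reply` are hypothetical placeholders, simple phrase matching would miss many real expressions of distress, and nothing here represents a clinical protocol.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical placeholder phrases; a real service would rely on clinically reviewed
# detection and escalation procedures, not a hard-coded list.
CRISIS_PHRASES = ("want to die", "kill myself", "end my life", "hurt myself")

ESCALATION_MESSAGE = (
    "It sounds like you may be in danger. Please contact local emergency "
    "services or a crisis line right now."
)


@dataclass
class Reply:
    text: str
    escalated: bool  # True when the normal chatbot reply was bypassed


def respond(message: str, generate_reply: Callable[[str], str]) -> Reply:
    """Run the safety check first; call the normal reply generator only if it passes."""
    # Lowercase and collapse whitespace so "WANT  to die" still matches.
    normalized = " ".join(message.lower().split())
    if any(phrase in normalized for phrase in CRISIS_PHRASES):
        # Never hand a flagged message to the generator; point to human help instead.
        return Reply(text=ESCALATION_MESSAGE, escalated=True)
    return Reply(text=generate_reply(message), escalated=False)


if __name__ == "__main__":
    def echo(message: str) -> str:
        return f"You said: {message}"

    print(respond("I had a long day", echo))
    print(respond("Sometimes I want to die", echo).escalated)
```

The design point is ordering: the safety check sees every message first and can override the generated reply, rather than being bolted on afterwards.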

Ethical and Regulatory Considerations

The rise of AI therapy chatbots brings with it a host of ethical questions and regulatory challenges:

- Informed Consent and Transparency:

Users must be fully aware that they are interacting with an AI, not a licensed human therapist. Clear communication about the capabilities and limitations of the service is essential.

- Data Privacy:

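Regulations such as the Health Insurance Portability and Accountability Act (HIPAA) in the United States, and similar data protection laws worldwide, set stringent rules for handling sensitive health information. HIPAA applies to covered entities, such as healthcare providers and health plans, and to their business associates, so a chatbot sold directly to consumers may fall outside its scope. Where such laws apply, developers and providers of AI therapy platforms must comply with them; either way, robust data encryption and secure storage practices are essential.

- Liability and Accountability: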

When an AI system provides inadequate or harmful advice, questions of liability arise. Establishing clear protocols and regulatory frameworks to address these concerns is imperative for the ethical deployment of AI in mental health care.

- Balancing Innovation and Safety:

Regulators are faced with the challenge of encouraging technological innovation while ensuring that patient safety and ethical standards are maintained. This often involves close collaboration between technologists, mental health professionals, and policy-makers.

Future Directions and Innovations

Looking ahead, the landscape of AI therapy is likely to evolve in several promising directions:

- Hybrid Models:

The most effective mental health care may come from integrating AI support with human therapy. Hybrid models, in which AI systems handle routine check-ins and preliminary assessments while human therapists handle more complex issues, could offer the best of both worlds.

- Enhanced Personalization:

As AI systems continue to gather data, they can offer increasingly personalized therapeutic interventions. Future innovations might include adaptive algorithms that modify their approach based on a user's progress and changing mental health needs.

- Integration with Telemedicine:

The growth of telemedicine has already transformed healthcare delivery. AI therapy chatbots could be integrated into telemedicine platforms, providing a continuous care loop that supports patients between traditional therapy sessions.

- Advanced Emotional Intelligence:

Research is ongoing into improving the emotional intelligence of AI systems. Better recognition of and response to emotional cues, potentially through voice analysis or facial-expression analysis in video sessions, could lead to more nuanced and supportive interactions.

- Broader Applications:

Beyond individual therapy, AI-driven mental health tools might be used in corporate wellness programs, schools, and community centers, expanding their impact on public mental health.

Conclusion

AI therapy chatbots represent a groundbreaking development in mental health care, offering both innovative solutions and complex challenges. They provide a unique opportunity to expand access to mental health support, reduce costs, and offer immediate assistance. However, they are not a replacement for human empathy and expertise. Balancing the benefits with ethical considerations, data privacy, and the need for human oversight will be key as this technology continues to evolve. As society navigates this new frontier, collaboration between technologists, mental health professionals, and regulators will be essential to ensure that the promise of AI in mental health is realized safely and effectively.

Frequently Asked Questions (FAQs)

Q1: What exactly is an AI therapy chatbot?

A: An AI therapy chatbot is a digital tool that uses artificial intelligence, particularly natural language processing and machine learning, to simulate therapeutic conversations. It offers mental health support, coping strategies, and emotional assistance, usually through text-based chat.

Q2: How effective are AI therapy chatbots compared to traditional therapy?

A: While AI chatbots can provide immediate support and are particularly useful for mild to moderate mental health issues, they are generally not a substitute for traditional therapy. Human therapists bring a depth of empathy, personalized insight, and clinical expertise that AI currently cannot match.

Q3: Are there risks associated with using AI therapy chatbots?

A: Yes. Risks include potential misinterpretation of user input, limited ability to handle severe crises, concerns about data privacy, and the possibility of algorithmic bias. It is crucial for users to understand these limitations and to seek professional help when necessary.

Q4: How do AI chatbots ensure my privacy and data security?

A: Reputable AI therapy platforms use robust encryption, comply with applicable data protection regulations (such as HIPAA in the U.S., where the platform falls under it), and maintain transparent privacy policies. However, users should always review these policies and stay informed about how their data is managed.

Q5: Can AI therapy chatbots replace human therapists?

A: Not entirely. AI chatbots are best viewed as complementary tools. They can provide initial support and routine check-ins, but complex or severe mental health issues generally require the nuanced understanding and personalized care that only human therapists can offer.

Q6: What future developments can we expect in AI mental health care?

A: Future advancements may include hybrid care models that combine AI and human therapy, enhanced personalization through better data integration, improved emotional intelligence capabilities, and broader integration with telemedicine and wearable health technologies.

Q7: Who is responsible for regulating AI therapy chatbots?

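A: Regulation typically involves multiple stakeholders, including government bodies, healthcare regulators, and industry groups. In the United States, for example, the Food and Drug Administration (FDA) oversees software that qualifies as a medical device. Ongoing collaboration among these groups is essential to ensure that such systems meet ethical standards and protect user safety.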

As AI technology continues to evolve, so too will its applications in mental health care. Whether you are seeking immediate support or a supplementary tool for your wellness journey, understanding both the promise and the pitfalls of AI therapy chatbots can help you make informed choices about your mental health care.

Sources: The New York Times