AI is spreading rapidly around the world, powering apps, writing emails, generating images, and reshaping industries. But here’s the twist: some of the people building and evaluating these systems are telling their friends and family to avoid them.

These aren’t casual users.

They’re the workers who test AI, correct it, clean its data, and moderate its output.

And many of them say the technology is far less reliable — and far more fragile — than the public realizes.

This is the story of the people behind the curtain, and why they’re sounding the alarm.

👀 The Workers Who See What We Don’t

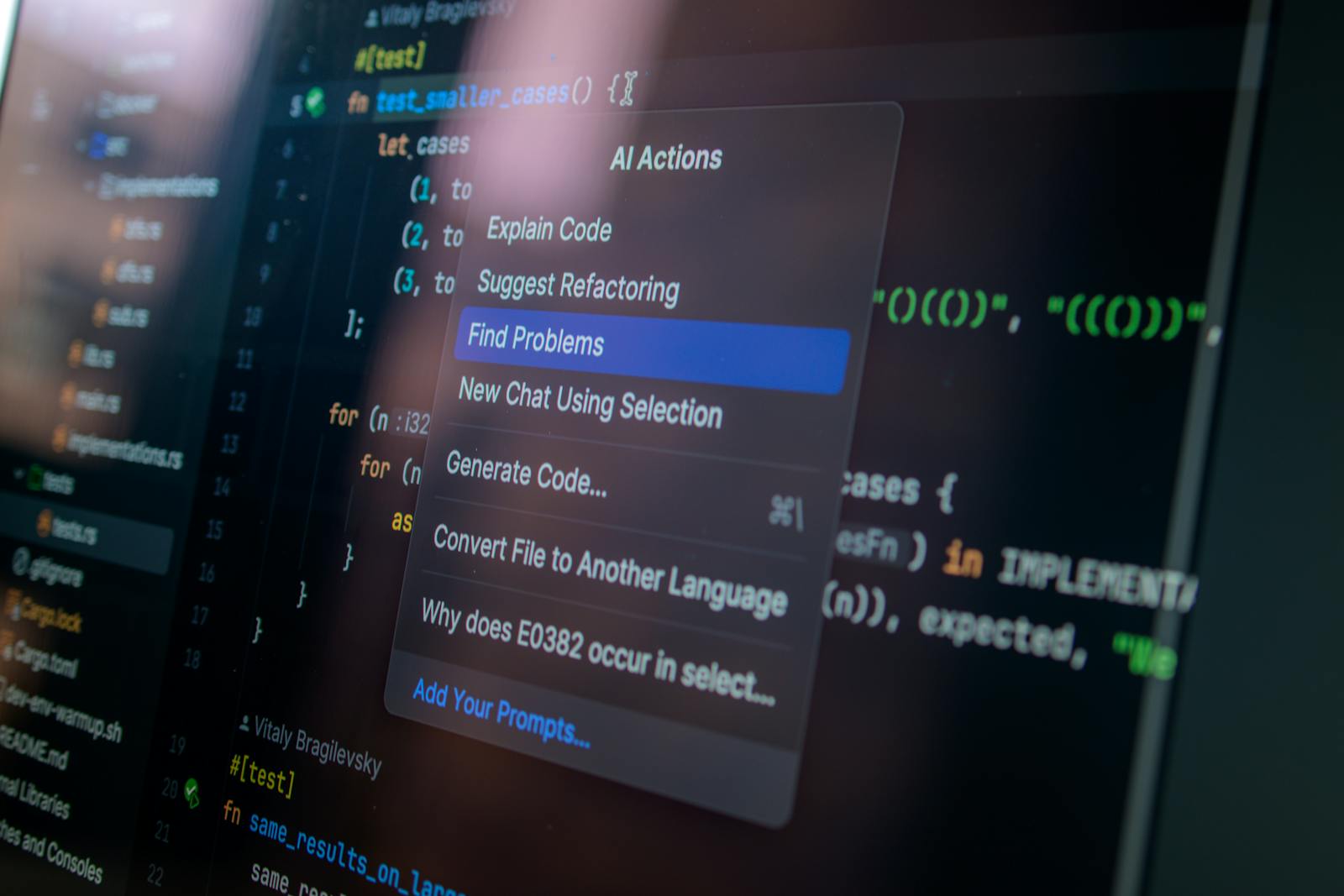

These AI raters and content evaluators handle tasks like:

- Flagging hallucinated or incorrect answers

- Rating harmful or biased outputs

- Reviewing disturbing AI-generated content

- Testing the limits and failures of models

- Moderating toxic or graphic material

Their job is not to celebrate AI.

Their job is to find every flaw — and there are many.

Some say they’ve witnessed so many errors, biases, and “nonsense outputs” that they now refuse to use generative AI themselves. Others have banned it at home.

One worker summed it up plainly:

“After seeing the data going in, I knew I couldn’t trust what comes out.”

💥 Why These Insiders Don’t Trust AI

1. Bad Data → Bad Output

AI models are only as good as the data they’re trained on. Workers report:

- incomplete datasets

- biased or inconsistent labeling

- rushed or sloppy inputs

- errors that never get fixed

Yet the AI appears confident — even when wrong.

2. Speed Over Safety

Workers say companies push updates quickly, sometimes skipping proper testing.

Innovation is racing ahead of quality control.

3. The Emotional Toll

Moderators review disturbing or graphic content that AI produces or tries to filter, and the repeated exposure leads to stress, burnout, and distrust.

4. Sensitive Topics Go Wrong, Fast

Workers have seen AI produce:

- incorrect medical guidance

- harmful advice

- racist or offensive outputs

- misinformation presented as fact

After seeing these failures up close, many don’t want their kids anywhere near the tools.

🔍 What We Don’t See as AI Users

The original reporting touched on worker skepticism, but here’s the deeper picture:

The Hidden Labor Behind AI

Tens of thousands of contractors around the world quietly power AI systems.

They:

- label data

- review toxic content

- rate AI-generated answers

- provide feedback loops

This workforce is essential — yet mostly invisible.

Poor Working Conditions

Many raters work under:

- low pay

- intense deadlines

- repeated exposure to disturbing content

- limited mental-health support

- nondisclosure agreements

AI’s “magic” relies on very human costs.

Global Inequality

Much of this labor is outsourced to workers in lower-income regions who lack protections or recourse.

Public Trust at Risk

If insiders don’t trust the systems, what does that mean for everyone else?

🧭 What This Means for the Future

- Trust needs rebuilding: The AI industry must address both worker conditions and model reliability.

- Users need digital literacy: People who accept AI answers without questioning them can be misled.

- Regulation is likely to tighten: Governments may introduce rules around transparency, data sourcing, and labor standards.

- AI success depends on human safety: A system built on hidden burnout and mistrust isn’t sustainable.

❓ FAQs

Q1: Are AI workers anti-AI?

Not exactly. Most believe in the technology — but they’ve seen enough flaws to urge caution.

Q2: Is AI unsafe to use?

It can be helpful, but users must verify outputs and avoid trusting it blindly.

Q3: What should everyday users do?

- Double-check important answers

- Avoid using AI for medical, legal, or financial decisions

- Be mindful of what personal data you share

Q4: Why is the behind-the-scenes labor important?

Because AI models depend heavily on human work — if that labor is rushed or poorly supported, AI quality suffers.

Q5: Will AI get better?

Yes — but only if companies invest in safer data practices, better oversight, and improved working conditions.

Q6: Should people stop using AI altogether?

No. The point is to use it wisely, thoughtfully, and with realistic expectations.

✅ Final Thought

AI feels magical. But behind the curtain are humans seeing its flaws every day — and they’re urging us to slow down, stay aware, and keep our eyes open.

If the people who build the tools say “be careful,” we should listen.

Sources: The Guardian