Silicon Valley has entered a new phase in the AI arms race. Instead of just training models on public datasets or controlled benchmarks, tech companies are now building full-scale replicas of real-world platforms — including Amazon-style shopping sites, Gmail-like inboxes, banking dashboards, and productivity suites — all designed for one purpose:

➡️ to train autonomous AI agents to navigate the digital world the same way humans do.

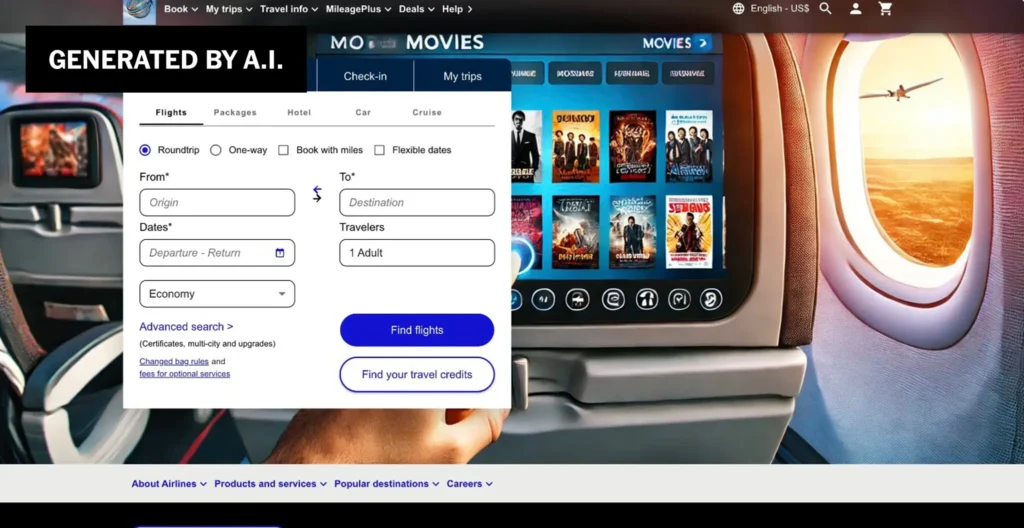

These copycat environments function like digital playgrounds or simulation labs where AI agents can browse, click, shop, reply to emails, make decisions, troubleshoot errors, and perform tasks that mirror real human workflows.

Why? Because the next frontier of artificial intelligence is not conversation — it’s action.

LLMs that chat are useful.

LLMs that do things are revolutionary.

This shift marks a profound moment: companies are preparing AI agents to operate computers, perform entire job functions, and eventually automate complex tasks across industries.

🧪 Why AI Companies Are Creating Fake Amazons, Fake Gmails, and Fake Banking Portals

Modern AI models need more than text to become “autonomous workers.” They need:

- realistic interfaces

- interactive environments

- multi-step tasks

- simulated consequences

- practical problem-solving challenges

Real-world platforms like Amazon and Gmail are too risky for training:

- Agents might buy items accidentally

- They could trigger fraud systems

- They could access private data

- Terms of service typically restrict automated access

- Mistakes could cause financial losses

So developers are building hyper-realistic clones — safe sandboxes where AI can learn to:

- send emails

- process returns

- navigate menus

- fill out forms

- handle errors

- perform shopping tasks

- execute customer service workflows

These simulations are the AI equivalent of flight simulators for pilots.

They allow AI agents to gain experience without damaging real systems.

🤖 The Goal: AI Agents That Perform Real Jobs

The AI industry is shifting from “chatbots that answer questions” to agents that complete work.

These agents could eventually manage:

1. Administrative Tasks

- email sorting

- scheduling

- form submissions

- data entry

2. Customer Support

- refund processing

- email responses

- issue escalation

3. E-commerce Operations

- inventory updates

- product listing management

- order tracking

4. Finance & HR Tasks

- payroll processing

- invoice management

- onboarding workflows

5. Software Operations

- bug reproduction

- system monitoring

- automated deployments

Training in simulated Amazon or Gmail environments is meant to give agents skills that transfer to real SaaS systems once deployed.

🧠 Why Simulation Is Critical for Training AI Agents

AI agents must learn to:

🧭 Navigate complex interfaces

Real platforms contain:

- pop-ups

- dynamic menus

- ads

- errors

- update notifications

Simulations replicate these conditions.

⛓️ Handle multi-step tasks

Real work involves sequences, not single prompts:

- open inbox → locate message → draft response → attach file → send

Agents need hands-on practice.
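To make the sequence above concrete, here is a minimal Python sketch of a sandboxed inbox. Everything in it is hypothetical: `FakeInbox`, its methods, and `reply_with_attachment` are illustrative names, not any vendor's actual training environment. It only shows how a multi-step task depends on each step completing in order.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Message:
    sender: str
    subject: str


@dataclass
class FakeInbox:
    """Hypothetical stand-in for a Gmail-like sandbox; nothing is really sent."""
    messages: List[Message]
    sent: List[dict] = field(default_factory=list)
    draft: Optional[dict] = None

    def locate(self, subject: str) -> Message:
        # Step 2: locate the message by its subject line.
        for message in self.messages:
            if message.subject == subject:
                return message
        raise LookupError(f"no message with subject {subject!r}")

    def draft_reply(self, message: Message, body: str) -> None:
        # Step 3: draft a response addressed to the original sender.
        self.draft = {
            "to": message.sender,
            "subject": f"Re: {message.subject}",
            "body": body,
            "attachments": [],
        }

    def attach(self, filename: str) -> None:
        # Step 4: attaching only makes sense once a draft exists.
        if self.draft is None:
            raise RuntimeError("attach called before a draft exists")
        self.draft["attachments"].append(filename)

    def send(self) -> dict:
        # Step 5: record the message in the sandbox's sent folder.
        if self.draft is None:
            raise RuntimeError("send called before a draft exists")
        sent, self.draft = self.draft, None
        self.sent.append(sent)
        return sent


def reply_with_attachment(inbox: FakeInbox, subject: str, body: str, filename: str) -> dict:
    """Run the whole workflow: locate, draft, attach, send."""
    message = inbox.locate(subject)
    inbox.draft_reply(message, body)
    inbox.attach(filename)
    return inbox.send()


inbox = FakeInbox([Message("pat@example.com", "Invoice request")])
result = reply_with_attachment(inbox, "Invoice request", "Attached.", "invoice.pdf")
print(result["to"], result["attachments"])
```

Calling `attach` or `send` out of order raises an error, which is the kind of feedback a sandbox can give an agent without any real email leaving the system.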

🔍 Learn to troubleshoot mistakes

Simulated environments let AI:

- try

- fail

- correct itself

- learn from missteps

without harming real systems.
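The try, fail, correct cycle can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: `FakeCheckoutForm` and `attempt_with_correction` are made-up names, and the "correction" is a hard-coded repair rule rather than anything a real agent would learn.

```python
class FakeCheckoutForm:
    """Hypothetical sandbox form that rejects a malformed ZIP code."""

    def submit(self, zip_code: str) -> dict:
        if not (zip_code.isdigit() and len(zip_code) == 5):
            return {"ok": False, "error": "zip must be 5 digits"}
        return {"ok": True, "error": None}


def attempt_with_correction(form, zip_code, max_attempts=3):
    """Try, observe the failure, repair the input, and try again."""
    history = []
    for _ in range(max_attempts):
        result = form.submit(zip_code)
        history.append((zip_code, result["ok"]))
        if result["ok"]:
            break
        # Toy repair step: keep only the digits and trim to five characters.
        zip_code = "".join(ch for ch in zip_code if ch.isdigit())[:5]
    return history


history = attempt_with_correction(FakeCheckoutForm(), "94 103")
print(history)  # [('94 103', False), ('94103', True)]
```

The first attempt fails and the second succeeds, and the only thing affected is the sandbox's own state.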

⚙️ Understand tool use

For AI to be useful in the workplace, it must use:

- browsers

- apps

- dashboards

- APIs

- internal tools

Simulation gives it that exposure.

🚧 The Hidden Challenges Behind Training Agentic AI

What’s rarely discussed publicly:

1. Data Privacy Issues

Privacy laws and user agreements sharply limit how companies can let AI agents interact with real user data.

2. Behavioral Alignment

AI agents need:

- boundaries

- rules

- ethical constraints

- safety guardrails

Otherwise, agents could act unpredictably.

3. Sim-to-Real Gap

Agents that succeed in simulations may fail in real-world environments where interfaces change frequently.
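A small Python sketch of why this gap appears, assuming a deliberately simplified interface modeled as a list of button labels. The layouts and both helper functions are hypothetical. An agent that memorized where a button sits in the simulation breaks when the real interface adds an item, while one that looks for the label still succeeds.

```python
def click_by_position(layout, index):
    """Brittle: assumes the button is always at the same position."""
    return layout[index]


def click_by_label(layout, label):
    """More robust: finds the button by its visible label."""
    for element in layout:
        if element == label:
            return element
    raise LookupError(label)


sim_layout = ["Search", "Cart", "Checkout"]
real_layout = ["Search", "Deals", "Cart", "Checkout"]  # a new item was inserted

print(click_by_position(sim_layout, 2))         # Checkout
print(click_by_position(real_layout, 2))        # Cart (the wrong button)
print(click_by_label(real_layout, "Checkout"))  # Checkout
```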

4. Infrastructure Costs

Running thousands of agent simulations requires massive compute power — and energy.

5. Security Risks

An agent trained to perform actions could:

- unintentionally exploit vulnerabilities

- escalate privileges

- access unauthorized systems

Safety remains a major concern.

🏢 The Corporate Strategy Behind Agent Simulation Labs

Big Tech Wants Enterprise Dominance

Microsoft, Google, Amazon, and Meta appear to see agent ecosystems as a potential $1 trillion market.

Owning the simulation infrastructure means:

- attracting businesses

- training industry-specific agents

- controlling AI workflows

- capturing enterprise spend

Startups Are Moving Faster

Companies like:

- Adept

- Cognition

- Rabbit

- Fixie

- Devin-inspired agent platforms

…are racing to become the “operating system for AI workers.”

Investors Are Betting Big

VCs see autonomous agents as the next cloud boom:

- lower labor costs

- continuous operation

- scalable digital workforces

The talent war is intense.

🌍 The Broader Impact: How AI Agents Could Reshape Work

Agentic AI could be the most significant workplace transformation since the personal computer.

For Employers

- higher productivity

- reduced labor expenses

- automated back-office tasks

- fewer repetitive workflows

For Workers

- increased competition

- shifting job roles

- need for AI oversight skills

- fewer entry-level positions

For Society

- new regulatory demands

- ethical questions

- workforce transition challenges

Agentic AI will change how we work, what we learn, and which jobs survive automation.

❓ Frequently Asked Questions (FAQs)

Q1: Why are tech companies building fake versions of Amazon and Gmail?

To create safe, controlled environments where AI agents can learn to perform real tasks without legal, financial, or security risks.

Q2: Are these simulations identical to real platforms?

They aim to be extremely close, but they intentionally exclude real private data, live payment systems, and other sensitive information.

Q3: What kinds of tasks can AI agents learn in these copycat systems?

Browsing, ordering, replying to emails, solving problems, navigating menus, completing forms — essentially digital knowledge work.

Q4: Will these agents eventually operate real accounts on real platforms?

Yes. That is the long-term goal — though strict safety controls will be necessary.

Q5: Does this mean AI will replace office workers?

Not entirely, but many repetitive digital tasks will be automated. Human oversight roles will grow.

Q6: Are there risks in giving AI the ability to take actions instead of just answering questions?

Yes — including security issues, misalignment, unintended operations, and the potential for harmful errors.

Q7: How long before agentic AI becomes mainstream?

Likely 1–3 years for enterprise adoption, 3–7 years for widespread consumer use.

Q8: Will simulations remain part of AI training long-term?

Yes. Just like pilots train in simulators, AI will need controlled practice environments indefinitely.

✅ Final Thoughts

Silicon Valley’s creation of Amazon and Gmail copycats is more than a clever training method — it marks a turning point in AI evolution.

We are moving from AI that talks

to AI that acts

to AI that works autonomously.

These simulated digital worlds are the training grounds for AI agents that will soon manage workflows, operate business systems, and perform complex actions across industries.

The future of work is arriving earlier than expected — and it’s being shaped inside virtual versions of the tools we use every day.

Sources: The New York Times