When artificial intelligence enters the conversation about healthcare, fear often follows. People imagine cold algorithms replacing compassionate doctors, machines making life-and-death decisions, and patients reduced to data points.

That fear misses what’s actually happening.

AI is not replacing doctors — it’s quietly helping them save lives.

And in a healthcare system already strained by errors, burnout, and shortages, refusing that help may be the greater risk.

What Medical AI Is Already Doing Today

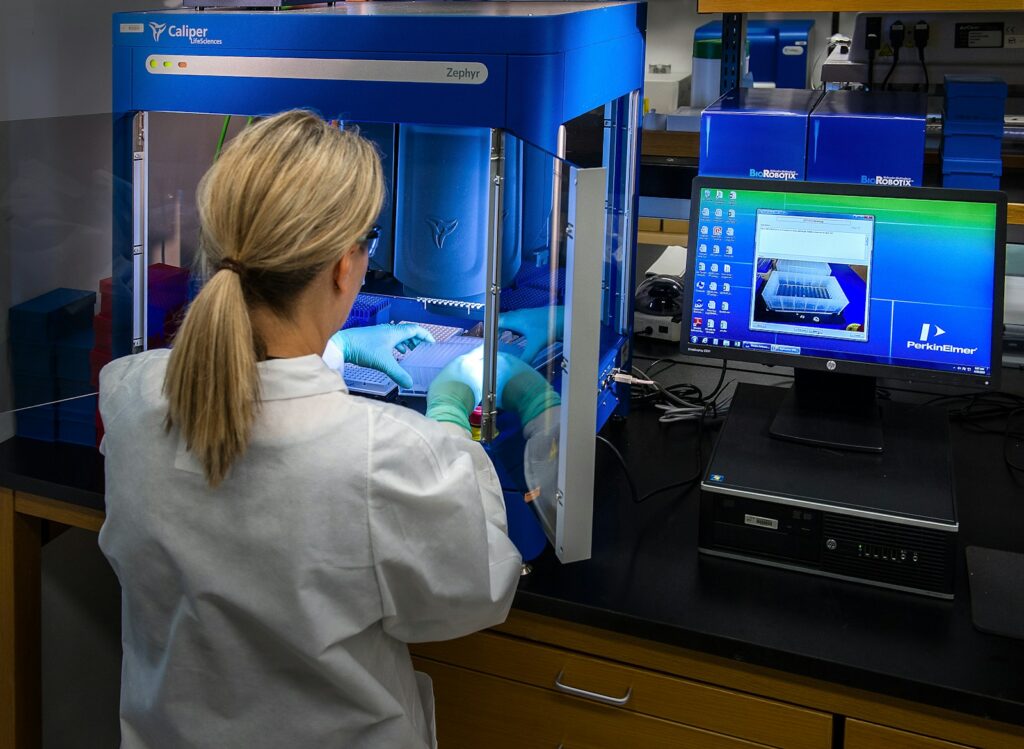

AI in healthcare isn’t theoretical. It’s already at work in hospitals, clinics, and research labs around the world.

Today, AI systems help:

- Detect cancers earlier in medical scans

- Identify strokes and heart attacks faster

- Flag dangerous drug interactions

- Predict patient deterioration in intensive care units

- Optimize hospital staffing and resource use

In many cases, AI doesn’t outperform doctors — it supports them, catching patterns humans can miss under pressure.

Why Healthcare Is a Natural Fit for AI

Modern medicine generates overwhelming amounts of data:

- Imaging and lab results

- Electronic health records

- Genomic and population health data

AI excels at spotting trends in massive datasets. It doesn’t get tired, distracted, or rushed. When paired with clinical expertise, it becomes a powerful safety net — not a decision-maker.

The Biggest Myth: “AI Will Replace Doctors”

This fear misunderstands how medical AI actually works.

In most clinical deployments today, AI systems:

- Do not make final diagnoses

- Do not prescribe treatment independently

- Do not assume legal responsibility

Instead, they:

- Highlight risks

- Rank possible conditions

- Suggest next steps

- Support clinical judgment

Think of AI as a second set of eyes — always alert, always checking.

Where AI Can Save the Most Lives

Earlier Detection

AI can spot disease sooner, when treatment tends to be more effective and less invasive.

Reducing Medical Errors

Fatigue and overload cause mistakes. AI helps catch them.

Expanding Access

In underserved areas, AI extends specialist-level insight to frontline clinicians.

Personalized Care

AI enables treatments tailored to individual patients rather than to population averages.

Why People Still Don’t Trust Medical AI

Skepticism is understandable.

Concerns include:

- Patient data privacy

- Algorithmic bias

- Lack of transparency

- Overreliance on technology

- Corporate profit motives

These are real risks — but they are governance problems, not reasons to reject AI altogether.

What Pro-AI Arguments Often Overlook

Data Quality Matters

AI trained on biased data can reinforce inequality if unchecked.

Human Oversight Is Essential

AI must assist clinicians — never replace them.

Transparency Builds Trust

Patients deserve to know when AI supports their care.

Workflow Integration Is Critical

Even accurate tools fail if poorly implemented.

AI’s Biggest Opportunity: Global Healthcare

AI’s greatest impact may come where doctors are scarce.

In low-resource settings, AI can:

- Assist diagnosis

- Prioritize urgent cases

- Support community health workers

This isn’t about replacing doctors — it’s about making limited expertise scalable.

What Responsible Medical AI Looks Like

Safe, ethical AI in healthcare requires:

- Clear human accountability

- Independent validation

- Strong data protection

- Continuous monitoring

- Informed patient consent

With these safeguards, AI becomes a layer of protection — not a threat.

Why Doing Nothing Is Also Dangerous

Healthcare already struggles with:

- Staff shortages

- Burnout

- Rising costs

- Preventable errors

Rejecting AI doesn’t preserve safety. It preserves existing failures.

The real question isn’t whether AI is perfect.

It’s whether we can afford to ignore tools that demonstrably reduce harm.

Frequently Asked Questions

Is AI making medical decisions on its own?

No. Doctors remain fully responsible for care decisions.

Can medical AI make mistakes?

Yes — which is why human oversight is non-negotiable.

Is patient data safe?

It can be, with strong regulation and security practices.

Will AI replace doctors or nurses?

No. It changes how they work, not whether they’re needed.

Should patients be told when AI is used?

Yes. Transparency is essential for trust.

Does AI worsen healthcare inequality?

It can, or it can reduce inequality, depending on how it is implemented.

The Bottom Line

Medical AI isn’t about surrendering control to machines.

It’s about acknowledging a simple truth: human judgment is strongest when supported by the right tools.

Used responsibly, AI can help clinicians catch disease earlier, reduce errors, and extend care to more people than ever before. Fear shouldn’t stop us from using tools that save lives — especially when the alternative is accepting preventable harm.

The future of healthcare isn’t human or artificial intelligence.

It’s human intelligence — made safer, smarter, and more humane with AI at its side.

Sources: The New York Times