Researchers are experimenting with a new kind of computer that could dramatically reduce the immense energy demands of artificial intelligence, especially generative models that create images, text, or other complex outputs. These experimental machines are known as thermodynamic computers. Instead of relying on traditional digital electronics to crunch bits, they harness physical processes such as thermal noise and the natural relaxation of a system toward equilibrium to perform computations, potentially cutting energy use by orders of magnitude compared with current AI hardware.

Why This Matters: The AI Energy Problem

Generative AI systems, such as those that produce images or write text, have become astonishingly powerful. But that power comes at a cost: huge energy consumption. Training and running these models on conventional CPUs and GPUs consumes massive amounts of electricity, adding to their environmental impact and straining power infrastructure. Much of the energy in conventional hardware goes into switching and moving bits while keeping each one firmly at a 1 or a 0. The heat this produces is dissipated as waste, and thermal fluctuations are treated purely as noise to be suppressed.

Thermodynamic computing flips that idea: it uses natural physical dynamics, especially randomness and noise, as part of the computational process itself rather than trying to suppress them.

How Thermodynamic Computing Works: The Basics

Conventional computers are digital and deterministic. They force signals into clear electrical states representing 1s and 0s, with circuits designed to eliminate noise or uncertainty. In contrast, thermodynamic computers embrace probabilities, fluctuations, and equilibrium physics:

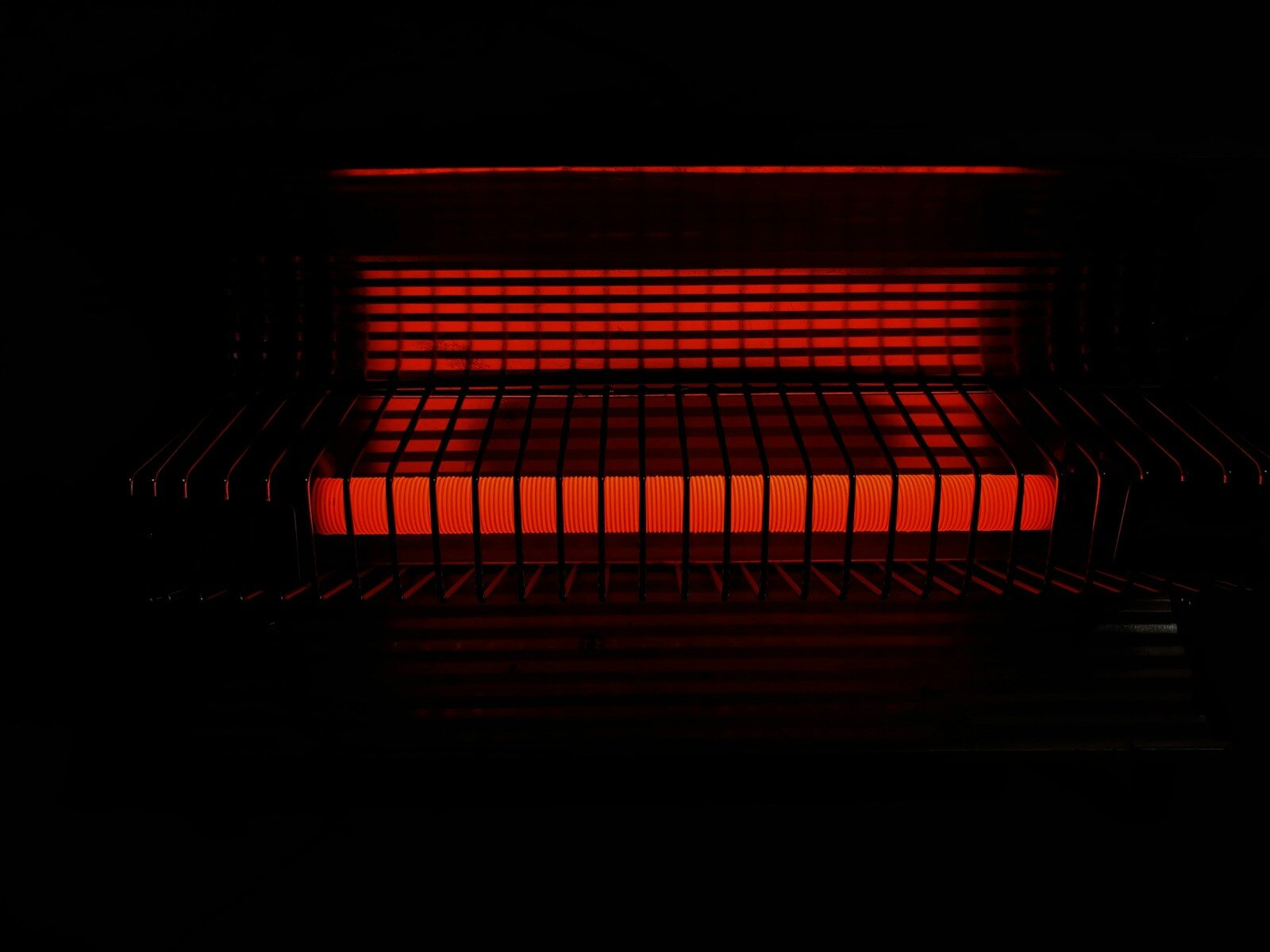

- They use physical systems — circuits, resonators, or thermal elements — that naturally evolve under the influence of thermal noise.

- Instead of forcing exact bit values, these systems drift toward equilibrium, where the final configuration encodes the solution to a problem.

- The system effectively finds answers by settling into the most probable configuration given its constraints.

Because this approach doesn’t fight against physical processes like noise, it can, in principle, perform complex mathematical tasks at much lower energy cost.
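To make "settling into equilibrium" concrete, the sketch below simulates it digitally. It assumes a single hypothetical state variable x in a quadratic energy landscape U(x) = (stiffness / 2)(x - target)², evolving under overdamped Langevin dynamics, a standard textbook model of a particle pushed by a force and jostled by thermal noise. Starting far from the minimum, an ensemble of such systems relaxes until its average sits at the energy minimum and its spread matches the Boltzmann prediction kT / stiffness. The constants and function names are illustrative assumptions, not a description of any real device; physical hardware would get its noise from heat rather than from a random number generator.

```python
import numpy as np

# Hypothetical "constraint": a quadratic energy U(x) = (STIFFNESS / 2) * (x - TARGET)**2.
STIFFNESS = 4.0
TARGET = 1.5
KT = 0.5  # thermal energy scale (Boltzmann constant times temperature), arbitrary units

def grad_u(x):
    """Gradient of the quadratic energy; the system is pushed down this slope."""
    return STIFFNESS * (x - TARGET)

def relax(n_particles=20_000, dt=1e-3, n_steps=5_000, seed=0):
    """Euler-Maruyama simulation of overdamped Langevin dynamics for many independent copies."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(-5.0, 5.0, size=n_particles)  # start far from equilibrium
    for _ in range(n_steps):
        noise = rng.standard_normal(n_particles)
        # Deterministic pull toward the minimum plus a thermal kick of size sqrt(2 kT dt).
        x = x - grad_u(x) * dt + np.sqrt(2.0 * KT * dt) * noise
    return x

samples = relax()
print(f"mean     {samples.mean():.3f}  (energy minimum at {TARGET})")
print(f"variance {samples.var():.3f}  (Boltzmann prediction kT/stiffness = {KT / STIFFNESS})")
```

Lowering KT tightens the distribution around the minimum, while raising it lets the system explore more; that balance between settling and exploring is what such hardware would exploit.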

Applications Already Demonstrated

Recent studies suggest thermodynamic computing systems could generate images from noise, similar to modern AI diffusion models. In experiments, researchers demonstrated that these systems could mimic aspects of neural networks used in image generation, producing simple outputs such as handwritten digits.

The approach mirrors diffusion-based AI models that gradually remove noise to create structured images. However, instead of performing millions of digital calculations, thermodynamic systems allow physics itself to perform part of the work.
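The denoising idea can be illustrated with annealed Langevin dynamics, a score-based sampling method closely related to diffusion models. The sketch assumes a hypothetical one-dimensional "dataset" made of two clusters at -2 and +2, whose score (the gradient of the log-density) is known in closed form, so no neural network is needed. Samples start as pure noise, and the noise level is lowered step by step until they land on the clusters. This is a digital stand-in chosen for illustration, not the method used in the studies described above.

```python
import numpy as np

# Hypothetical "training data": two clusters centred at -2 and +2, each with std 0.5.
MEANS = np.array([-2.0, 2.0])
DATA_STD = 0.5

def score(x, sigma):
    """d/dx log p_sigma(x), where p_sigma is the two-cluster density blurred by noise of std sigma."""
    var = DATA_STD**2 + sigma**2
    diff = MEANS[None, :] - x[:, None]              # shape (n_samples, 2)
    logits = -diff**2 / (2.0 * var)
    logits -= logits.max(axis=1, keepdims=True)     # numerical stability
    resp = np.exp(logits)
    resp /= resp.sum(axis=1, keepdims=True)         # probability that each sample belongs to each cluster
    return (resp * diff).sum(axis=1) / var

def annealed_langevin(n_samples=5_000, steps_per_level=100, eps=0.1, seed=0):
    rng = np.random.default_rng(seed)
    sigmas = np.geomspace(5.0, 0.01, 20)            # noise levels, from very noisy to nearly clean
    x = sigmas[0] * rng.standard_normal(n_samples)  # start from pure noise
    for sigma in sigmas:
        step = eps * (DATA_STD**2 + sigma**2)       # step size scaled to the current blurred variance
        for _ in range(steps_per_level):
            noise = rng.standard_normal(n_samples)
            x = x + step * score(x, sigma) + np.sqrt(2.0 * step) * noise
    return x

generated = annealed_langevin()
print("fraction near +2:", np.mean(generated > 0))
print("mean distance from 0:", np.abs(generated).mean())
```

Scaling the step size with the current noise level keeps each stage stable: early, heavily blurred stages take large steps, and later stages take small ones that refine detail.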

The Hardware Side: What Has Been Built So Far

Several research teams and startups are exploring hardware implementations of thermodynamic computing:

Prototype Thermodynamic Chips

Some companies have developed experimental chips that rely on probabilistic behavior and noise-based computation. These chips are designed to perform tasks like linear algebra operations more efficiently than traditional digital processors.
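One idea from the research literature on noise-based linear algebra encodes a symmetric positive-definite matrix A and a vector b in a quadratic energy U(x) = ½ xᵀAx - bᵀx. At thermal equilibrium the state follows a Boltzmann distribution whose average is exactly A⁻¹b, so simply letting the system fluctuate and averaging its state solves Ax = b. The sketch below simulates this digitally with many parallel copies; it is not a model of any particular company's chip, and the function name and parameters are hypothetical.

```python
import numpy as np

def thermodynamic_solve(A, b, kT=1.0, dt=0.01, burn_in=1_000, n_avg=2_000, n_chains=1_000, seed=0):
    """Estimate A^{-1} b by averaging overdamped Langevin dynamics in U(x) = 0.5 x^T A x - b^T x.

    A must be symmetric positive definite. Each of the n_chains rows is an independent
    simulated copy of a hypothetical device; the estimate averages over copies and over time.
    """
    rng = np.random.default_rng(seed)
    x = np.zeros((n_chains, b.size))
    running_sum = np.zeros(b.size)
    for step in range(burn_in + n_avg):
        force = b - x @ A  # -grad U(x) = b - A x for every copy at once (valid because A is symmetric)
        x = x + force * dt + np.sqrt(2.0 * kT * dt) * rng.standard_normal(x.shape)
        if step >= burn_in:  # discard the initial relaxation, then accumulate
            running_sum += x.mean(axis=0)
    return running_sum / n_avg

A = np.array([[4.0, 1.0], [1.0, 3.0]])
b = np.array([1.0, 2.0])
print("thermodynamic estimate:", thermodynamic_solve(A, b))
print("direct solve:          ", np.linalg.solve(A, b))
```

The spread of the samples carries information too: at equilibrium their covariance is kT·A⁻¹, which is how the same setup can, in principle, estimate a matrix inverse.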

Analog Thermodynamic Circuits

Research laboratories have built small-scale systems where networked circuits respond to thermal fluctuations. By programming constraints into the system, the hardware settles into equilibrium states that correspond to solutions for mathematical or optimization problems.
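A common way to "program constraints" into such a system is to express an optimization problem as an energy function whose lowest state is the best answer. The sketch below encodes a hypothetical Max-Cut problem (split a graph's nodes into two groups so that as many edges as possible cross between them) as an Ising energy, then uses Metropolis simulated annealing, a digital algorithm, to mimic a system cooling into its lowest-energy state. An analog circuit would perform this relaxation physically; the graph, cooling schedule, and names here are assumptions made for the example.

```python
import itertools
import math
import random

# Hypothetical graph: a square (0-1-2-3) with one diagonal (0-2).
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
N_NODES = 4
NEIGHBOURS = [[] for _ in range(N_NODES)]
for a, b in EDGES:
    NEIGHBOURS[a].append(b)
    NEIGHBOURS[b].append(a)

def energy(spins):
    """Ising energy: +1 for each uncut edge, -1 for each cut edge (spins are +1 or -1)."""
    return sum(spins[a] * spins[b] for a, b in EDGES)

def anneal(t_start=3.0, t_end=0.05, sweeps=500, seed=1):
    rng = random.Random(seed)
    spins = [rng.choice((-1, 1)) for _ in range(N_NODES)]
    best = list(spins)
    for k in range(sweeps):
        temperature = t_start * (t_end / t_start) ** (k / (sweeps - 1))  # geometric cooling
        for i in range(N_NODES):
            # Energy change if spin i is flipped.
            delta = -2 * spins[i] * sum(spins[j] for j in NEIGHBOURS[i])
            # Metropolis rule: always accept downhill moves, sometimes accept uphill ones.
            if delta <= 0 or rng.random() < math.exp(-delta / temperature):
                spins[i] = -spins[i]
            if energy(spins) < energy(best):
                best = list(spins)  # keep the lowest-energy configuration visited
    return best

best = anneal()
brute_force_min = min(energy(s) for s in itertools.product((-1, 1), repeat=N_NODES))
cut_edges = (len(EDGES) - energy(best)) // 2
print("groups:", best, "cut edges:", cut_edges, "optimal:", energy(best) == brute_force_min)
```

High temperatures let the system escape poor configurations early on; as the temperature drops, it is increasingly confined to low-energy states, which is the behaviour an equilibrium-seeking circuit provides for free.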

Although promising, these systems remain in early stages and do not yet match the scale or flexibility of GPUs used in large AI models.

Scientific Foundations: Physics Meets Computation

Thermodynamic computing is rooted in statistical physics and information theory. At its core:

- Thermal noise — random microscopic motion — becomes a computational resource.

- The system operates probabilistically, similar to certain machine learning algorithms.

- The approach aligns with fundamental physical limits on computation, including the theoretical minimum energy needed to erase a bit of information (Landauer's limit).

This represents a shift from fighting entropy to working with it.
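The theoretical minimum energy for handling information is usually stated as Landauer's limit: erasing one bit must dissipate at least k_B·T·ln 2 of energy, where k_B is Boltzmann's constant and T is the absolute temperature. A quick calculation, assuming a room temperature of 300 K, shows how small this bound is:

```python
import math

K_B = 1.380649e-23    # Boltzmann constant in J/K (exact SI value)
EV = 1.602176634e-19  # joules per electronvolt (exact SI value)

def landauer_limit(temperature_k):
    """Minimum energy (J) needed to erase one bit at the given temperature: k_B * T * ln 2."""
    return K_B * temperature_k * math.log(2)

e_bit = landauer_limit(300.0)
print(f"{e_bit:.3e} J per bit ({e_bit / EV * 1000:.1f} meV)")  # 2.871e-21 J per bit (17.9 meV)
```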

Challenges Ahead

Despite its promise, thermodynamic computing faces significant challenges:

Scaling

Current prototypes are small and task-specific. Scaling them to support complex AI models will require breakthroughs in materials science and circuit design.

Precision

Because these systems are inherently probabilistic, maintaining consistent accuracy while leveraging randomness is a delicate engineering challenge.
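One generic way to see the precision trade-off: if a probabilistic system's answer is read out by averaging N noisy samples, the error of the average shrinks only as σ/√N, so each extra decimal digit of accuracy costs roughly 100 times more samples, and therefore more time or energy. The sketch below checks this scaling numerically using made-up values for the true answer and the noise level; it is a statistical illustration, not a model of any specific hardware.

```python
import numpy as np

def rms_error(n_samples, true_value=0.7, noise_std=1.0, n_trials=2_000, seed=0):
    """RMS error when a hypothetical noisy readout is averaged over n_samples draws."""
    rng = np.random.default_rng(seed)
    readings = true_value + noise_std * rng.standard_normal((n_trials, n_samples))
    estimates = readings.mean(axis=1)  # one averaged answer per trial
    return float(np.sqrt(np.mean((estimates - true_value) ** 2)))

for n in (10, 100, 1_000):
    print(f"N = {n:>5}: RMS error {rms_error(n):.4f} (theory {1.0 / np.sqrt(n):.4f})")
```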

Software Integration

Most AI frameworks are built for digital hardware. Adapting algorithms—or creating entirely new ones—for thermodynamic systems is an ongoing research effort.

Why This Could Change AI

If successfully developed at scale, thermodynamic computing could:

- Dramatically reduce energy use in AI data centers.

- Lower carbon emissions tied to large-scale AI workloads.

- Enable AI applications in energy-constrained environments.

- Introduce new computing architectures optimized for probabilistic reasoning.

Rather than replacing digital computers, thermodynamic systems may complement them in specialized, high-efficiency roles.

Frequently Asked Questions (FAQ)

Q: What is a thermodynamic computer?

A thermodynamic computer uses physical processes, such as thermal fluctuations and a system's relaxation toward thermal equilibrium, to perform computations instead of relying purely on digital logic.

Q: Is this the same as quantum computing?

No. Quantum computing relies on quantum mechanical phenomena like superposition and entanglement. Thermodynamic computing operates using classical physics and thermal processes.

Q: How much energy could this save?

Some studies suggest orders-of-magnitude reductions for certain tasks, though real-world savings depend on future engineering progress.

Q: Can thermodynamic computers run large AI models today?

Not yet. They are still experimental and limited to smaller-scale or specialized tasks.

Q: When might this technology become mainstream?

It will likely take years of research and development before thermodynamic computing becomes widely deployed.

Source: Live Science