Artificial intelligence is rapidly transforming modern medicine, and nowhere is that transformation more consequential than in the operating room. AI-powered systems are now assisting surgeons with imaging, diagnostics, planning, and even real-time decision-making. Proponents argue these tools can reduce human error and improve outcomes. Critics warn that premature adoption has already led to botched surgeries, misidentifications, and dangerous overreliance on machines.

This article expands on recent investigative reporting by examining how AI is being used in surgery, what went wrong in reported failures, why these problems are emerging, what is often missing from the debate, and how healthcare systems can balance innovation with patient safety.

How AI Is Being Used in the Operating Room

AI in surgery is not a single technology. It includes a wide range of tools designed to assist — not replace — human clinicians.

Common applications include:

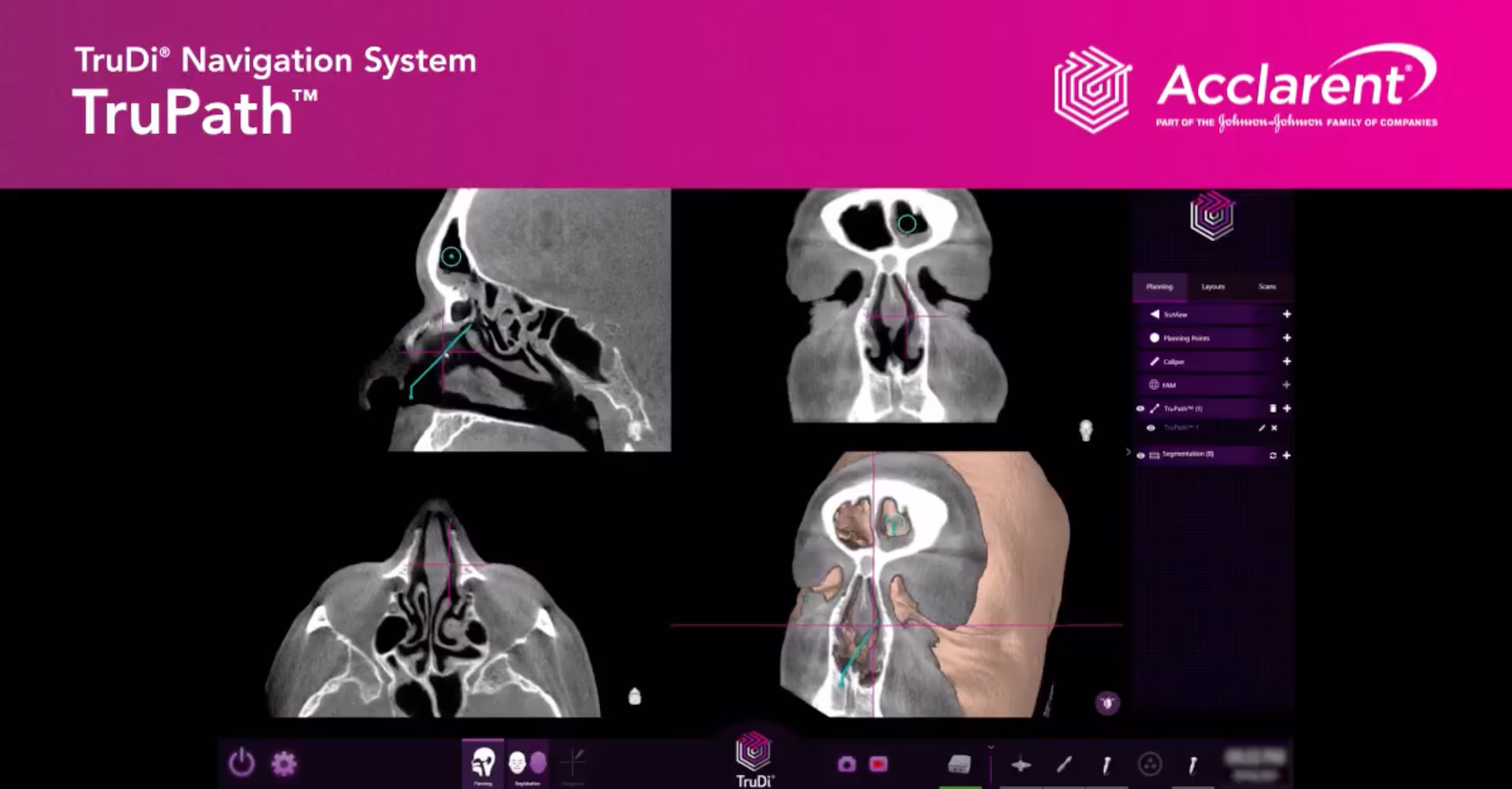

- Pre-surgical planning using AI-analyzed scans

- Image recognition systems that label organs, tumors, or blood vessels

- Robotic surgical assistance guided by AI-enhanced precision

- Predictive analytics to assess surgical risk

- Post-operative monitoring for complications

In theory, these tools can enhance accuracy and reduce fatigue-related mistakes. In practice, results have been mixed.

What the Reported Failures Reveal

Botched Surgeries and Misidentifications

Investigations have documented cases where:

- AI systems misidentified anatomical structures

- Surgical plans were generated from flawed or incomplete data

- Alerts were ignored or misunderstood by clinicians

- Human oversight was reduced because AI recommendations appeared authoritative

In extreme cases, errors led to wrong-site surgery, delayed intervention, or severe patient harm.

AI Didn’t Act Alone — Humans Were Still Involved

A critical point often missed:

AI systems do not operate independently in operating rooms.

Failures usually involved:

- Poorly trained staff

- Inadequate validation of AI tools

- Overreliance on automated outputs

- Weak institutional safeguards

The problem is less “AI replaced surgeons” and more “AI was trusted too much, too soon.”

Why AI Errors Are Especially Dangerous in Surgery

Medicine Has No “Undo” Button

Unlike software bugs, surgical errors:

- Cannot be rolled back

- Have immediate physical consequences

- May cause permanent harm or death

This makes tolerance for error extremely low.

AI Can Appear More Confident Than It Is

AI systems often present outputs with:

- Clean visuals

- Precise measurements

- Binary recommendations

This can mask uncertainty and discourage questioning, especially in high-pressure environments.
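To make the point concrete, here is a minimal sketch of how the same model output can look authoritative or appropriately tentative depending on how it is displayed. Everything in it is hypothetical and for illustration only: the `Finding` type, the two display functions, and the 0.90 review threshold are assumptions, not taken from any real surgical system or clinical guideline.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    label: str          # structure a hypothetical model thinks it sees
    probability: float  # the model's estimated probability for that label (0 to 1)


def binary_display(finding: Finding) -> str:
    """What a 'clean' interface might show: the label alone, no uncertainty."""
    return finding.label


def hedged_display(finding: Finding, review_below: float = 0.90) -> str:
    """Show the label with its probability and flag low-confidence calls.

    review_below is an illustrative threshold, not a clinical standard.
    """
    pct = round(finding.probability * 100)
    text = f"{finding.label} ({pct}% estimated)"
    if finding.probability < review_below:
        text += " - LOW CONFIDENCE, verify visually"
    return text


uncertain = Finding(label="ureter", probability=0.62)
print(binary_display(uncertain))
print(hedged_display(uncertain))
```

Both displays come from the same underlying estimate. The first hides the fact that the model is far from sure, while the second gives a clinician a reason to look twice.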

Training Data Has Limits

AI surgical systems are trained on historical data that may:

- Underrepresent certain body types or conditions

- Reflect outdated practices

- Contain labeling errors

When real-world cases differ from training data, performance can degrade sharply.

What’s Often Missing From the Conversation

Regulation Lags Behind Reality

Many AI tools enter hospitals through:

- Software updates

- Vendor add-ons

- “Decision support” classifications

This can allow them to bypass the level of scrutiny applied to traditional medical devices.

Hospitals Are Under Pressure to Adopt AI

Healthcare systems face:

- Staffing shortages

- Rising costs

- Competitive pressure

AI is often marketed as a solution — sometimes faster than safety frameworks can adapt.

Accountability Is Unclear

When AI contributes to a surgical error:

- Is the surgeon responsible?

- The hospital?

- The software company?

Legal and ethical frameworks have not kept pace.

The Potential Upside Still Matters

Despite the risks, AI in surgery is not inherently dangerous.

When used correctly, it can:

- Improve visualization during complex procedures

- Reduce surgeon fatigue

- Assist less-experienced clinicians

- Standardize best practices

The problem is not AI itself, but how it is introduced and governed.

How Safer AI in Surgery Can Be Achieved

Key safeguards include:

- Rigorous clinical trials before deployment

- Transparent disclosure of AI limitations

- Mandatory human override authority

- Continuous performance monitoring

- Clear liability standards

- Training programs focused on critical thinking, not blind trust

AI should function as a second opinion, not an unquestioned authority.
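As one illustration of what continuous performance monitoring could look like, the sketch below tracks how often clinicians confirm an AI tool's output over a rolling window of recent cases and flags the tool for review when agreement drops. The `AgreementMonitor` class, its window size, and its alert threshold are all hypothetical choices made for this example. A real program would need clinically validated metrics and thresholds.

```python
from collections import deque
from typing import Optional


class AgreementMonitor:
    """Rolling check of how often clinicians confirm an AI tool's output."""

    def __init__(self, window: int = 50, alert_below: float = 0.95):
        # Keep only the most recent `window` cases; True means the clinician agreed.
        self.outcomes = deque(maxlen=window)
        self.alert_below = alert_below  # illustrative threshold, not a standard

    def record(self, ai_output: str, clinician_finding: str) -> None:
        """Log one case by comparing the AI output with the clinician's finding."""
        self.outcomes.append(ai_output == clinician_finding)

    def agreement_rate(self) -> Optional[float]:
        """Fraction of recent cases where the clinician confirmed the AI, or None if empty."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self) -> bool:
        """True when recent agreement has fallen below the alert threshold."""
        rate = self.agreement_rate()
        return rate is not None and rate < self.alert_below


monitor = AgreementMonitor(window=4, alert_below=0.75)
for ai, human in [("artery", "artery"), ("nerve", "nerve"),
                  ("ureter", "vessel"), ("tumor", "tumor")]:
    monitor.record(ai, human)
print(monitor.agreement_rate(), monitor.needs_review())

monitor.record("artery", "vein")  # the oldest case drops out of the window
print(monitor.agreement_rate(), monitor.needs_review())
```

The design choice here is that the clinician's finding is treated as the reference, which keeps the human in the position of authority and the AI in the position of a second opinion whose track record is being checked.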

What This Means for Patients

Patients increasingly need to ask:

- Is AI being used in my procedure?

- How experienced is the surgical team with this technology?

- What happens if the AI is wrong?

Informed consent must evolve to reflect AI’s role in care.

Frequently Asked Questions

Is AI performing surgeries on its own?

No. AI assists surgeons but does not independently operate on patients.

Are AI-assisted surgeries unsafe?

Not inherently. Many are successful, but risks increase when systems are poorly tested or overtrusted.

Why are errors happening now?

Because adoption is accelerating faster than regulation, training, and oversight.

Can AI improve surgical outcomes?

Yes — when used cautiously, transparently, and with strong human oversight.

Who is responsible if AI contributes to a mistake?

Responsibility is currently shared and legally unclear, which is a major unresolved issue.

Final Thoughts

AI’s entry into the operating room represents both extraordinary promise and serious danger.

Used responsibly, it can help surgeons save lives. Used recklessly, it can amplify mistakes with devastating consequences. The lesson from recent failures is not to abandon AI in medicine but to slow down, demand evidence, and remember a fundamental truth:

In healthcare, intelligence — artificial or human — must always be matched with humility, accountability, and care.

Because in the operating room, innovation isn’t just about what’s possible.

It’s about what’s safe.

Sources: Reuters